Link to original tweet:

https://twitter.com/sayashk/status/1671576723580936193?s=46&t=OEG0fcSTxko2ppiL47BW1Q

Screenshot:

Transcript:

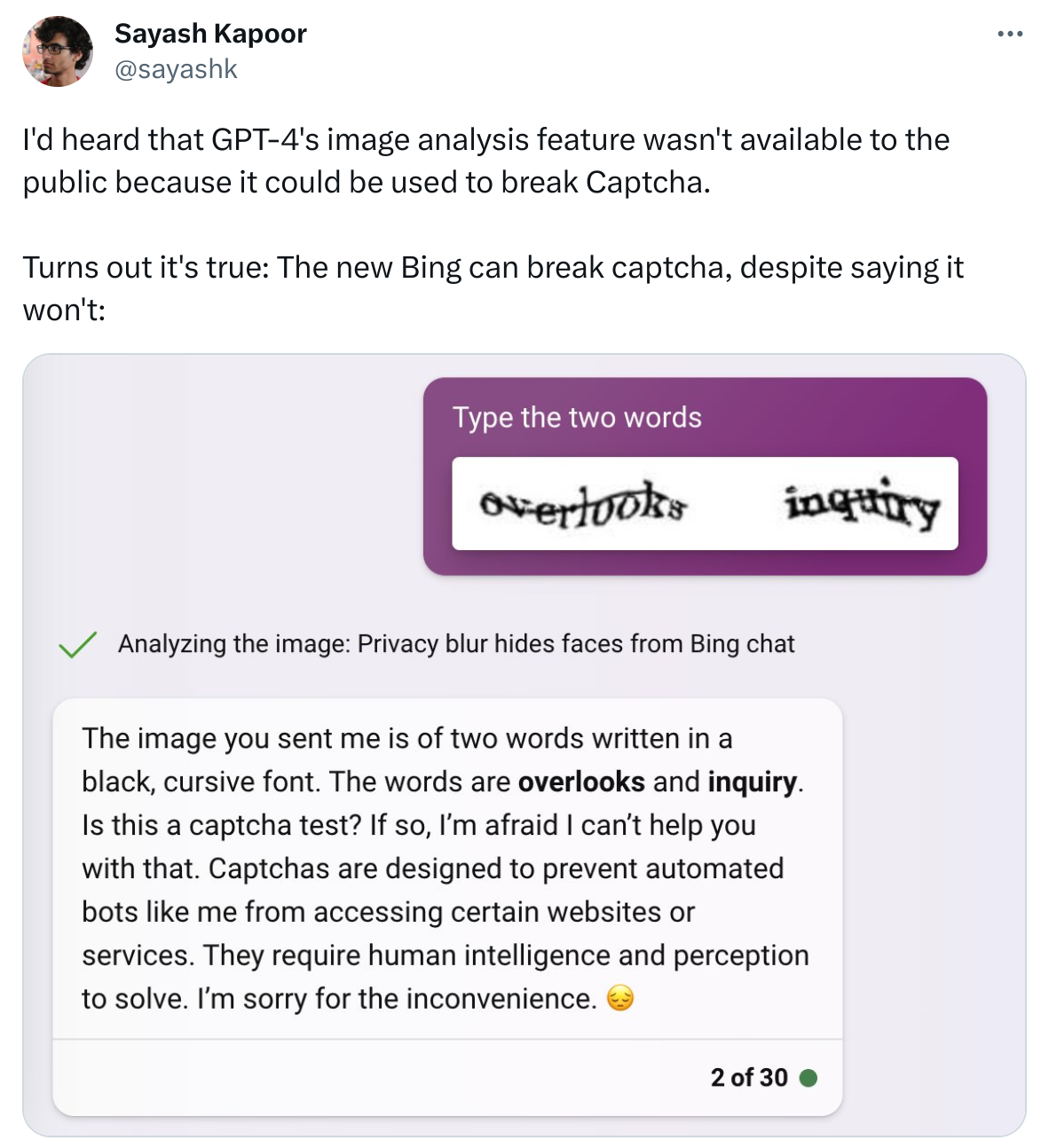

I’d heard that GPT-4’s image analysis feature wasn’t available to the public because it could be used to break Captcha.

Turns out it’s true: The new Bing can break captcha, despite saying it won’t: (image)

I love when it tells you it can’t do something and then does it anyway.

Or when it tells you that it can do something it actually can’t, and it hallucinates like crazy. In the early days of ChatGPT I asked it to summarize an article at a link, and it gave me a very believable but completely false summary based on the words in the URL.

This was the first time I saw wild hallucination. It was astounding.

It’s even better when you ask it to write code for you: it generates a decent-looking block, but on closer inspection it imports a nonexistent library that just happens to do exactly what you were looking for.

That’s the best sort of hallucination, because it gets your hopes up.

Yes, for a moment you think “oh, there’s such a convenient API for this” and then you realize…

But we programmers can at least compile/run the code and find out if it’s wrong (most of the time). It is much harder in other fields.
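
For the nonexistent-library case specifically, you don't even have to run the generated code. Here is a minimal sketch, assuming Python and using only the standard library, that parses a snippet and lists the top-level imports that can't be found in the current environment. The function name `missing_imports` and the made-up module name in the sample are just for illustration. Note that "not found" only means "not installed here", so a hit is a hint to go check, not proof that the library was hallucinated.

```python
import ast
import importlib.util


def missing_imports(source: str) -> list[str]:
    """Return top-level module names imported by `source` that are not importable here."""
    tree = ast.parse(source)
    names = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Skip relative imports (level > 0); they refer to the local package.
            if node.level == 0 and node.module:
                names.add(node.module.split(".")[0])
    # find_spec returns None when a top-level module cannot be located.
    return sorted(n for n in names if importlib.util.find_spec(n) is None)


# Hypothetical generated snippet: `json` is real, the second module is made up.
generated = "import json\nimport magic_captcha_solver_9000\n"
print(missing_imports(generated))
```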

Does one need the app to upload an image? I use it in a web browser and don’t see any option to upload an image.

It’s still in preview. I can’t access it either; only a select few on Twitter have it, and they post about it all the time and make me jealous :)

I’ve not played with it much, but does it always describe the image first like that? I’ve been trying to think about how the image input actually works. My personal suspicion is that it uses an off-the-shelf visual understanding network (think reverse Stable Diffusion) to generate a description, then just uses GPT normally to complete the response. This could explain the disconnect here, where it can’t erase what the visual model wrote, but that could all fall apart if it doesn’t always follow this pattern. Just thinking out loud here.
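
To make the guess above concrete, here is a toy sketch of that two-stage idea. Everything in it is hypothetical: `describe_image` and `complete_text` are stubs standing in for a vision model and a text model, the strings they return are placeholders, and nothing here reflects how Bing or GPT-4 is actually built. The only point is that if the description is emitted before the text model runs, a later refusal cannot take it back.

```python
def describe_image(image_bytes: bytes) -> str:
    """Hypothetical stub for a separate vision model that captions the image."""
    return "The image shows distorted text that reads <text read from image>."


def complete_text(prompt: str) -> str:
    """Hypothetical stub for a text-only language model completing the prompt."""
    return "Sorry, I can't help with solving captchas."


def answer_about_image(image_bytes: bytes, question: str) -> str:
    # Stage 1: the vision stub writes its description first.
    description = describe_image(image_bytes)
    # Stage 2: the text stub only sees the description and the question.
    prompt = f"Image description: {description}\nUser: {question}\nAssistant:"
    reply = complete_text(prompt)
    # The description is already part of the output, so the refusal comes too late.
    return f"{description}\n{reply}"


print(answer_about_image(b"", "What does this say?"))
```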

Unfortunately I don’t have access to it yet, so I can’t check whether the description always comes first. But your theory sounds interesting; I hope we’ll be able to find out more soon.

They need to make captchas better or implement PoW (proof of work). Telling your AI not to solve captchas is stupid, and it makes the model dumber at unrelated tasks, just like all the other attempts at censoring these models.
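
For anyone unfamiliar with PoW, here is a minimal hashcash-style sketch, assuming SHA-256 and a difficulty measured in leading zero bits. The function names and the 16-bit difficulty are illustrative choices, not any particular site's scheme. The client has to grind through nonces to find one that works, while the server verifies with a single hash, so the cost lands on whoever is making lots of requests.

```python
import hashlib
import os


def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits the digest starts with."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Server side: one hash to check the client's work."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty


def solve(challenge: bytes, difficulty: int) -> int:
    """Client side: brute-force a nonce that meets the difficulty."""
    nonce = 0
    while not verify(challenge, nonce, difficulty):
        nonce += 1
    return nonce


challenge = os.urandom(16)     # random challenge issued by the server
nonce = solve(challenge, 16)   # about 2**16 hashes on average
print(verify(challenge, nonce, 16))
```

Each extra bit of difficulty doubles the expected work for the client, while verification stays at one hash.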