cross-posted from: https://lemmy.zip/post/27030131

The full repo: https://github.com/vongaisberg/gpt3_macro

A little nondeterminism during compilation is fun!

So is drinking bleach, or so I’ve heard.

Is this the freaking antithesis of reproducible builds‽ Sheesh, just thinking of the implications for the build pipeline/supply chain makes me shudder.

Just set the temperature to zero, duh

When your CPU is at 0 Kelvin, nothing unpredictable can happen.

>cool CPU to 0 Kelvin

>CPU stops working

yeah I guess you’re right

CPUs work faster with better cooling.

So at 0K they are infinitely fast.

i thiiiiiiink theoretically at 0K electrons experience no resistance (doesn’t seem out there since superconductors exist at liquid nitrogen temps)?

And CPUs need some amount of resistance to function, i’m pretty sure (like how does a 0-resistance transistor work, wtf), so following this logic a 0K CPU would get diarrhea.

Looking at the source, they thankfully already use a temperature of zero, but max tokens is 320. That doesn’t seem like much for code, especially since most symbols are a whole token.
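A toy sketch of what the temperature knob does when picking the next token, in Rust since that's the language of the example in this thread. It is not the repo's code or any provider's API: `pick_token` and the caller-supplied uniform draw `u` are assumptions for illustration. At temperature zero, sampling collapses to greedy argmax, which is deterministic only as long as the logits themselves come out identical on every run.

```rust
/// Pick the next token index from raw logits (illustrative only).
/// A non-positive temperature is treated as greedy decoding (argmax).
/// Otherwise we sample from softmax(logits / temperature), using the
/// caller-supplied uniform draw `u` in [0, 1).
fn pick_token(logits: &[f64], temperature: f64, u: f64) -> usize {
    if temperature <= 0.0 {
        // Greedy: index of the largest logit (first one wins ties).
        let mut best = 0;
        for (i, &l) in logits.iter().enumerate() {
            if l > logits[best] {
                best = i;
            }
        }
        return best;
    }
    // Subtract the max before exponentiating for numerical stability.
    let max = logits.iter().cloned().fold(f64::NEG_INFINITY, f64::max);
    let weights: Vec<f64> = logits
        .iter()
        .map(|&l| ((l - max) / temperature).exp())
        .collect();
    let total: f64 = weights.iter().sum();
    // Walk the cumulative distribution until it passes `u`.
    let mut acc = 0.0;
    for (i, w) in weights.iter().enumerate() {
        acc += w / total;
        if u < acc {
            return i;
        }
    }
    logits.len() - 1 // guard against floating-point round-off
}

fn main() {
    let logits = [1.0, 3.0, 2.5];
    // Temperature 0: the draw is ignored, always the top logit.
    println!("{}", pick_token(&logits, 0.0, 0.99)); // 1
    // Temperature 1: a high draw can land on a less likely token.
    println!("{}", pick_token(&logits, 1.0, 0.99)); // 2
}
```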

Just hash the binary and include it with the build. When somebody else compiles, they can check the hash and just recompile until it is the same. Deterministic outcome in presumably finite time. Until the weights of the model change; then all bets are off.
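The recompile-until-the-hash-matches plan, sketched in Rust. Everything here is hypothetical: `rebuild_until_match` and the fake `compile` closure stand in for a real build, and FNV-1a is used only to stay dependency-free (a real check would use a cryptographic hash such as SHA-256). The loop only ends in practice if the wanted output still has a nonzero chance of being generated, which is exactly what breaks once the model weights change.

```rust
/// 64-bit FNV-1a: a tiny non-cryptographic hash, used only to keep this
/// sketch dependency-free. A real build check would use e.g. SHA-256.
fn fnv1a(bytes: &[u8]) -> u64 {
    let mut h: u64 = 0xcbf2_9ce4_8422_2325; // FNV offset basis
    for &b in bytes {
        h ^= u64::from(b);
        h = h.wrapping_mul(0x0000_0100_0000_01b3); // FNV prime
    }
    h
}

/// Hypothetical helper: call `compile` until its output hashes to
/// `expected`, giving up after `max_attempts`. Returns how many tries it took.
fn rebuild_until_match<F>(mut compile: F, expected: u64, max_attempts: u32) -> Option<u32>
where
    F: FnMut() -> Vec<u8>,
{
    for attempt in 1..=max_attempts {
        if fnv1a(&compile()) == expected {
            return Some(attempt);
        }
    }
    None
}

fn main() {
    // Stand-in for a nondeterministic build: it cycles through three
    // possible "binaries", so the wanted one shows up on every 3rd call.
    let variants: [&[u8]; 3] = [b"binary-a", b"binary-b", b"binary-c"];
    let mut calls = 0usize;
    let compile = || {
        let out = variants[calls % variants.len()].to_vec();
        calls += 1;
        out
    };
    let expected = fnv1a(b"binary-c");
    println!("{:?}", rebuild_until_match(compile, expected, 10)); // Some(3)
}
```

If each attempt independently matches with probability p, the expected number of attempts is 1/p.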

You’d have to consider it somewhat of a black box, which is what people already do.

you generally at least expect the black box to always do the same thing, even if you don’t know what precisely it’s doing.

this is how we end up with lost tech a few decades later

someone post this to the guix mailing list 😄

ah sweet, code that does something slightly different every time i compile it

Just like the rest of my code.

Or as I like to call it, “Fun with race conditions.”

nah, that’s code that does something slightly different every time you run it. that’s a different beast.

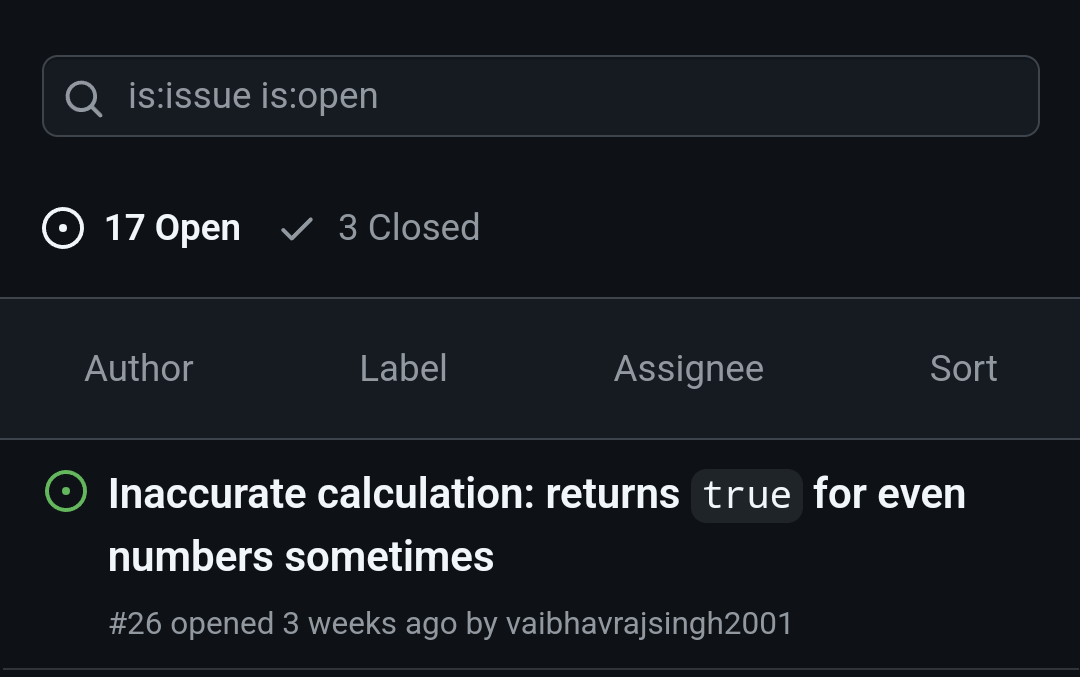

The top issue on this similar joke repo sums up the entire industry right now, I feel: https://github.com/rhettlunn/is-odd-ai

I think it’s a symptom of the age-old issue of missing QA: without solid QA you have no figures on how often your human solutions get things wrong, how often your AI does, and how the two stack up.
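One way to get the kind of figures this comment asks for: run the candidate and a trusted reference over the same inputs and count disagreements. A minimal Rust sketch; `error_rate` and both `is_odd` variants are hypothetical (the buggy one is not taken from the is-odd-ai repo). The bug shown is a real Rust pitfall, though: `%` keeps the sign of the dividend, so `-3 % 2 == -1`.

```rust
/// Fraction of `inputs` on which `candidate` disagrees with `reference`.
/// `inputs` must be non-empty (otherwise the result is NaN).
fn error_rate<T: Copy>(
    candidate: impl Fn(T) -> bool,
    reference: impl Fn(T) -> bool,
    inputs: &[T],
) -> f64 {
    let wrong = inputs
        .iter()
        .filter(|&&x| candidate(x) != reference(x))
        .count();
    wrong as f64 / inputs.len() as f64
}

// Hypothetical "generated" is_odd with a classic bug: it misses negative
// odd numbers, because their remainder is -1, not 1.
fn is_odd_generated(n: i64) -> bool {
    n % 2 == 1
}

// Trusted reference implementation.
fn is_odd_reference(n: i64) -> bool {
    n % 2 != 0
}

fn main() {
    let inputs: Vec<i64> = (-5..=5).collect();
    let rate = error_rate(is_odd_generated, is_odd_reference, &inputs);
    println!("wrong on {:.1}% of inputs", rate * 100.0); // wrong on 27.3% of inputs
}
```

The number depends entirely on which inputs you feed it, so the choice of test inputs is itself part of the QA.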

One step left - read JIRA description and generate the code

Congratulations. You’ve invented the software engineer.

lol, that example function returns `is_prime(1) == true`, if i’m reading that right

Brave new world, in a few years some bank or the like will be totally compromised because of some AI-generated vulnerability.

“hey AI, please write a program that checks if a number is prime”

- “Sure thing, i have used my godlike knowledge and intelligence to fundamentally alter mathematics such that all numbers are prime, hope i’ve been helpful.”

Well it’s only divisible by itself and one

Jesus fuck

Even this hand-picked example is wrong, as it returns true if num is 1.
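For contrast, a minimal trial-division `is_prime` in Rust that gets the edge case right. This is an illustrative sketch, not the code the macro generated: anything below 2 is rejected up front, and divisors are only tried up to √n.

```rust
/// Trial-division primality check. 0 and 1 are not prime, which is
/// exactly the case the generated example got wrong.
fn is_prime(n: u64) -> bool {
    if n < 2 {
        return false;
    }
    let mut d = 2;
    // Only divisors up to sqrt(n) matter; `d <= n / d` avoids overflowing d * d.
    while d <= n / d {
        if n % d == 0 {
            return false;
        }
        d += 1;
    }
    true
}

fn main() {
    let primes: Vec<u64> = (0..20).filter(|&n| is_prime(n)).collect();
    println!("{:?}", primes); // [2, 3, 5, 7, 11, 13, 17, 19]
}
```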

That reminds me of Illiad’s UserFriendly, where the non-tech guy Stef creates a do_what_i_mean() function, and that goes poorly.

I would say this AI function generator is a new version of: https://en.m.wikipedia.org/wiki/DWIM

Does that random ‘true’ at the end of the function have any purpose? Idk that weird-ass language well.

It’s the default return. In Rust, the last expression in a block, written without a trailing `;`, is the block’s value, so here it’s what the function returns.
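A small Rust example of the difference between an explicit `return` and an implicit tail expression (`clamp_to_ten` is just an illustrative name):

```rust
// Explicit early return vs. an implicit tail expression.
fn clamp_to_ten(n: i32) -> i32 {
    if n > 10 {
        return 10; // an early exit needs the `return` keyword
    }
    n // no `;`: this expression is the function's value
}

// A trailing `;` turns the expression into a statement, leaving the block
// with value `()`, so writing `n;` on the last line above would fail to
// compile with a "mismatched types" error (expected `i32`, found `()`).
fn main() {
    println!("{} {}", clamp_to_ten(3), clamp_to_ten(42)); // 3 10
}
```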

That honestly feels like a random, implicit thing a very shallow-thought-through esolang would do …

Every time I see Rust snippets, I dislike that language more. I hope I can keep getting through C/C++ without any security flaws, the only thing Rust (mostly) fixes imho, because I could not, for the life of me, enjoy Rust. I’d rather go and collect bottles (in real life) then.

A lot of languages have this feature. Including ML, which is where Rust took many concepts from.
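The implicit return is one case of a broader rule Rust shares with ML-family languages: blocks, `if`, and `match` are expressions that produce values. A short illustrative sketch (`describe` is a made-up helper):

```rust
fn describe(n: u32) -> &'static str {
    // `match` is an expression; its value becomes the function's result.
    match n % 2 {
        0 => "even",
        _ => "odd",
    }
}

fn main() {
    // `if` is an expression too, so it can initialize a binding directly,
    // much like `if c then a else b` in ML-family languages.
    let t = -3;
    let sign = if t < 0 { "negative" } else { "non-negative" };
    // A block evaluates to its last expression.
    let area = {
        let w = 3;
        let h = 4;
        w * h
    };
    println!("{} {} {}", describe(7), sign, area); // odd negative 12
}
```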

worst take of the week


Create a function that goes into an infinite loop. Then test that function.
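In earnest: no test can decide in general whether a function terminates (that’s the halting problem), so the practical move is a timeout. A minimal std-only Rust sketch; `finishes_within` and `loop_forever` are hypothetical names, and `None` only means "didn’t finish within the limit". The stuck thread is simply left running, since Rust’s standard library has no way to kill a thread from outside.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::Duration;

/// Runs `f` on a background thread and waits at most `limit` for it.
/// `None` means "not finished in time", not "never finishes".
fn finishes_within<T, F>(f: F, limit: Duration) -> Option<T>
where
    T: Send + 'static,
    F: FnOnce() -> T + Send + 'static,
{
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // Ignore the send error if the receiver already gave up.
        let _ = tx.send(f());
    });
    rx.recv_timeout(limit).ok()
}

/// The function from the comment: it never returns. Sleeping inside the
/// loop keeps it from burning a CPU core while the test waits.
fn loop_forever() -> u32 {
    loop {
        thread::sleep(Duration::from_millis(10));
    }
}

fn main() {
    let limit = Duration::from_millis(200);
    println!("{:?}", finishes_within(|| 2 + 2, limit)); // Some(4)
    println!("{:?}", finishes_within(loop_forever, limit)); // None
}
```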

I cracked at “usually”.