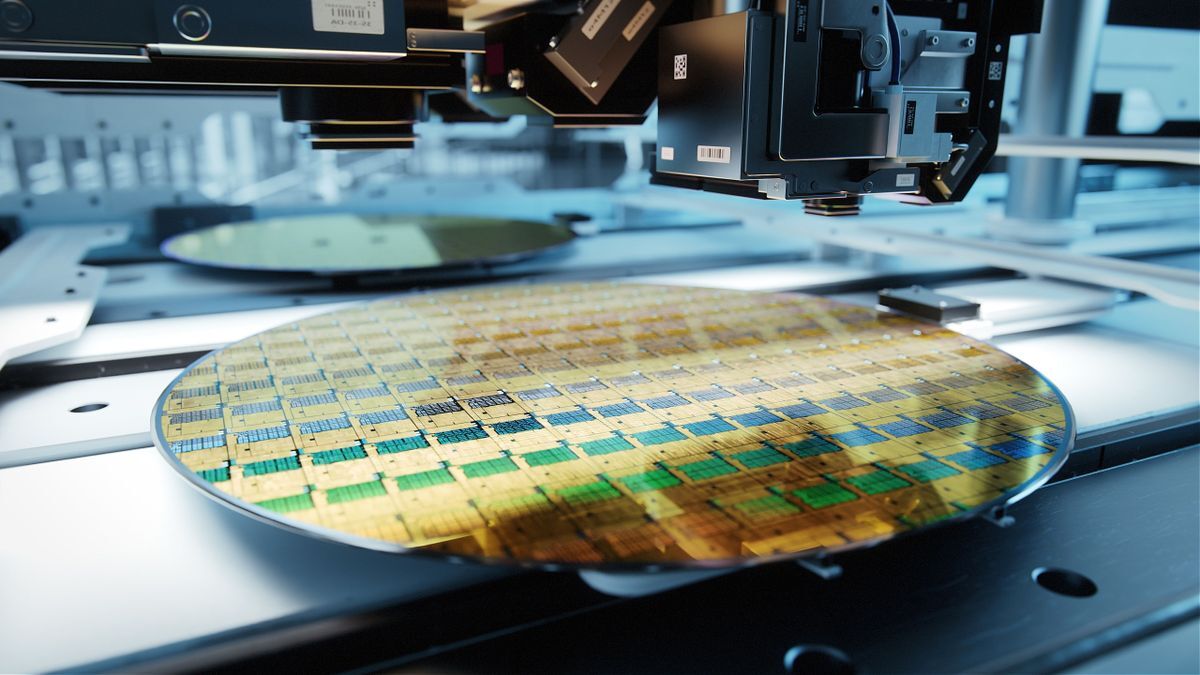

Firm predicts it will cost $28 billion to build a 2nm fab and $30,000 per wafer, a 50 percent increase in chipmaking costs as complexity rises::As wafer fab tools are getting more expensive, so do fabs and, ultimately, chips. A new report claims that

Or, and hear me out, we could just write less shitty software…

You’re right. This is the biggest issue facing computing currently.

The ratio of people who are capable of writing less-shitty software to the number of things we want to do with software ensures this problem will not get solved anytime soon.

Eh, I disagree. Every software engineer I’ve ever worked with knows how to make some optimizations to their code bases. But it’s literally never prioritized by the business. I suspect this will shift as IaaS takes over and it becomes a lot easier to generate the necessary graphs showing that the stability of your product has been maintained while the consumed resources have been reduced.

But what if I want to do all my work inside a JavaScript “application” inside a web browser inside a desktop?

(We really do have so much CPU power these days that we’re inventing new ways to waste it…)

But where’s the fun in that?

As long as humans have some hand in writing and designing software we’ll always have shitty software.

While I agree with the cynical view of humans and shortcuts, I think it’s actually the “automated” part of the process that’s to blame. If you develop an app from scratch, there’s only so much you can code. However, if you start with a framework, you’ve automated part of your job for huge efficiency gains, but you’re also starting off with a much bigger app and likely lots of functionality you aren’t really using.

I was getting more at the fact that in software development it’s never just the developers making all of the decisions. There are always stakeholders who force time and attention onto other things and set unrealistic deadlines, while most software developers I know would love to be able to take the time to do everything the right way first.

I also agree with the example you provided. Back when I used to work on more personal projects I loved it when I found a good minimal framework that allowed you to expand it as needed so you rarely ever had unused bloat.

If you’re not using the functionality, it’s probably not significantly contributing to the required CPU/GPU cycles. Though I would welcome a counterexample.

And NVIDIA will use this as an excuse to hike up their prices by 100+%.

On a serious note, this will progressively come down in price as time passes, and not everyone needs to use cutting-edge 2nm technology. The transition to 2nm will also increase density, so comparing wafer prices without accounting for the increased density doesn’t give you the whole picture.
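To put rough numbers on the density point, here is a minimal sketch. It assumes the 50 percent figure from the headline applies to the wafer price, and that wafer size and yield stay the same. The density gains in the loop are purely hypothetical placeholders, not figures from the report.

```python
def cost_per_transistor_ratio(wafer_cost_ratio: float, density_ratio: float) -> float:
    """Cost per transistor on the new node relative to the old node.

    Assumes the same wafer size and the same yield on both nodes,
    which is a simplification.
    """
    return wafer_cost_ratio / density_ratio


# 50 percent higher wafer price, taken from the headline.
WAFER_COST_RATIO = 1.5

# Hypothetical density improvements, for illustration only.
for density_ratio in (1.0, 1.15, 1.3, 1.5):
    ratio = cost_per_transistor_ratio(WAFER_COST_RATIO, density_ratio)
    print(f"density x{density_ratio:.2f} -> cost per transistor x{ratio:.2f}")
```

Under these assumptions, cost per transistor only breaks even if density also improves by 50 percent. Any smaller gain means each transistor still gets more expensive, just by less than the wafer price alone suggests.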

Plus, DRAM scaling is becoming cumbersome and a lot of other components cannot scale to 2nm, so 2nm is mostly a marketing term, and there are a lot of challenges that make this tech so expensive and difficult to design and produce.

Afaik 2nm is the theoretical limit for current transistor tech, so this is sort of the end-game for this type of tech.

2nm process doesn’t actually mean 2nm though. Hasn’t in over a decade.

The current 3nm process has a 48nm gate pitch and a 24nm metal pitch. The 2nm process will have a 45nm gate pitch and a 20nm metal pitch.

“Nm” is just “generation” today. After 5nm was 3nm, next is 2nm, then 1nm. They’ll change the name after that even though they’re still nowhere near actual nm size.
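A quick back-of-the-envelope check of that, using only the pitch figures quoted above. Treating gate pitch times metal pitch as a stand-in for cell area is a rough simplification on my part, not how foundries actually report density.

```python
def area_scale(gate_old: float, metal_old: float,
               gate_new: float, metal_new: float) -> float:
    """Relative area of a gate-pitch x metal-pitch rectangle, new vs. old."""
    return (gate_new * metal_new) / (gate_old * metal_old)


# Pitches in nm as quoted in the comment above: "3nm" -> "2nm".
pitch_scale = area_scale(48, 24, 45, 20)

# What the shrink would be if the node names were literal lengths.
name_scale = (2 / 3) ** 2

print(f"area scale implied by the pitches: {pitch_scale:.2f}")
print(f"area scale implied by the names:   {name_scale:.2f}")
```

By this crude measure the pitches shrink the area to about 78 percent, while the names would suggest about 44 percent. That gap is why the number is better read as a generation label.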

Where can I read more about this?

It depends on how in-depth you want to go.

Newsletter semianalysis.com

Youtube Asianometry

Wikipedia

Some university lithography textbooks. Sadly I don’t know which ones.

Intel already has plans to name the further generations xxA, after angstroms.

Yeah I’m a bit curious what the marketing will be as they have to get more vertical, 3D. Will there be naming to reflect that or will they just follow existing naming, 0.5nm?

I didn’t think the ~5nm limit could be broken due to quantum tunneling.

The nm number is just the smallest part on the wafer. It’s not actually the transistor.

They solved this problem by making the nanometer bigger.

This was my understanding as well: that beyond ~7nm the reliability begins to suffer because the diameter of an electron ‘orbit’ or whatever becomes a factor.

Admittedly I’m not an expert. But my understanding was that to break this limitation and keep Moore’s law going, we’re kinda leaning into quantum computation to eventually fill the incoming void.

The effect you mean is quantum tunneling. Essentially, at that small a scale an electron can ‘teleport’ outside of the system, which is obviously a big no-no for computing.

Your device will be 11% faster and the battery will last 6% more but it will dramatically change the way you interact with your device.

And cost 4000% more.

If it’s enough to run on-device AI, it’s a win. Imagine autocorrect being able to mangle your texting without ever connecting to the cloud. Huge privacy win.

With the goggles coming soon, I think they’ll focus chip improvements on the GPU and neural engine to better support that.

Autocorrect doesn’t send anything to the cloud, it’s just a dictionary. If your keyboard is sending your texts to the cloud you have to change your keyboard, not run AI. AI doesn’t do autocorrect, it could maybe do word suggestions but would be super inefficient at it and probably not much better than current methods.

I’m writing this on a 22 nm CPU and the letters appear hella fast.

Not so far-fetched:

“I predict in 100 years computers will be twice as powerful, 10,000 times larger, and only the five richest kings of Europe will own one.”

Use that money to speed up the development of quantum computing so it will make these transistor chips obsolete.

Quantum computing wouldn’t make these transistors obsolete.

Quantum computing is only really good at very specific types of calculations. You wouldn’t want it being used for the same type of job that the CPU handles.

So it will not run vim?

Quantum computers are only useful where you can avoid decoherence. Decoherence happens whenever you erase a bit, for example when you overwrite a memory bit with a new value. That requires dissipating energy and interacting with the outside world, which has to absorb the heat of the calculation. While in principle a quantum computer can do any calculation that a classical computer can do, the result is not useful unless it is observed, and observation happens pretty much every time a logic gate output flips in a classical computer.

I know you’re joking, but I feel like answering anyway.

I’m sure you could get it to do that if you forced that through engineering, but it wouldn’t be anywhere near as efficient as just using a CPU.

CPUs need to be able to handle a large number of instructions quickly, one after the next, and they have to do it reliably. Think of a CPU as an assembly line: there are multiple stages for each instruction, but they are set up so that work is already happening for the next instruction at each step (or clock cycle). However, if there’s a problem with one of the stages (or a collision), then you have to flush out the entire assembly line and start over on all of the work in all of the stages. This wouldn’t be noticeable at all to the user, since each step takes one clock cycle at the CPU’s GHz speed and there are only a few stages.
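If it helps, the assembly line idea can be sketched as a toy cycle count. This is an idealized model with made-up numbers (a 5-stage pipeline, one instruction entering per cycle, each flush costing a full refill), not a description of any real CPU.

```python
def pipeline_cycles(instructions: int, stages: int, flushes: int = 0) -> int:
    """Cycles for an idealized pipeline.

    The first instruction needs `stages` cycles to get through, then one
    instruction completes per cycle. Each flush throws away the work in
    flight and costs (stages - 1) extra cycles to refill the line.
    """
    if instructions == 0:
        return 0
    return stages + (instructions - 1) + flushes * (stages - 1)


def unpipelined_cycles(instructions: int, stages: int) -> int:
    """Cycles if each instruction had to finish before the next one started."""
    return instructions * stages


# Hypothetical workload: 1000 instructions on a 5-stage pipeline.
print(unpipelined_cycles(1000, 5))          # no overlap at all
print(pipeline_cycles(1000, 5))             # perfect overlap
print(pipeline_cycles(1000, 5, flushes=10)) # overlap with ten flushes
```

Even with ten flushes the pipelined version needs 1044 cycles versus 5000 without overlap, which is the point: a flush hurts, but each one only costs a handful of cycles.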

Just like how GPUs are excellent at specific use cases, quantum processing will be great at solving certain complex problems very quickly. But compared to a CPU handling the mundane, everyday instructions, it would not handle this task well. It would be like having a worker on the assembly line who could do everything super quickly… but you would have to take a lot more time to verify that the worker did everything right, and there would be a lot of times that things were done wrong.

So, yeah, you could theoretically use quantum processing for running vim… but it’s a bad idea.

Quantum computing is useless in most cases because of how fragile and inaccurate it can be, due in part to the near-absolute-zero temperatures it is required to operate at.