cross-posted from: https://lemmy.dbzer0.com/post/95652

Hey everyone, you may have noticed that some of us have been raising alarms about the amount of spam accounts being created on insufficiently protected instances.

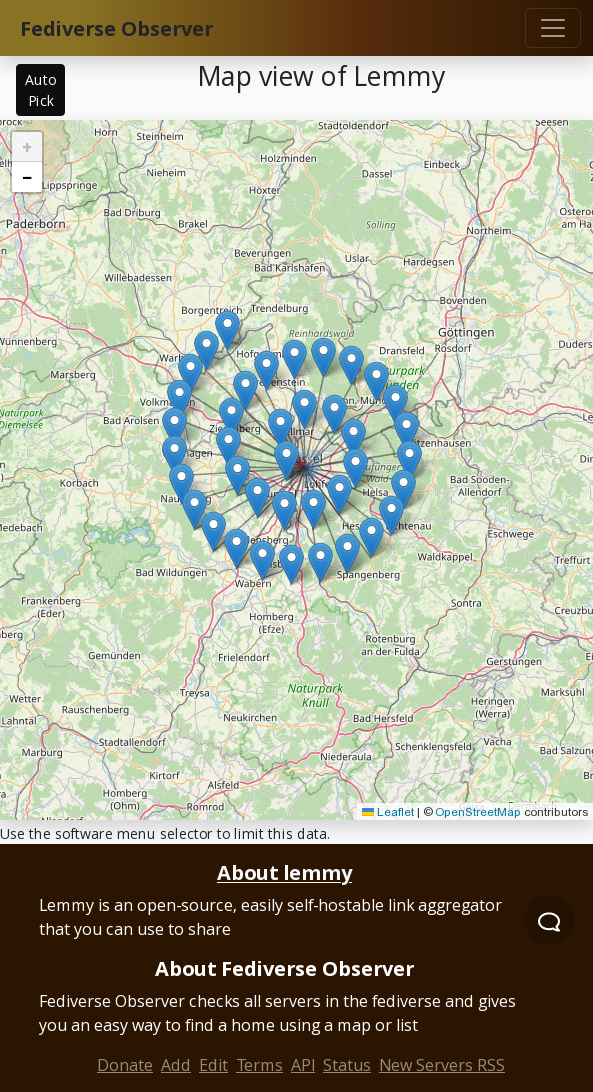

As I wanted to get ahead of this before we’re shoulders deep in spam, I developed a small service which can be used to parse the Lemmy Fediverse Observer and retrieve instances which are suspicious enough to block.

The Overseer provides a fully documented REST API which you can use to retrieve the instances in 3 different formats: one with all the info, one with just the names, and one as a CSV you can copy-paste into your defederation setting. You can even adjust the level of suspicion you want to have.

Not only that, I also developed a Python script which you can edit and run to automatically update your defederation list. You can set that baby to run on a daily schedule, and it will make sure that any new suspicious instances are caught and that any servers which cleared up their spam accounts are recovered.

I plan to improve this service further. Feel free to send me ideas and PRs.

A funny result is the accumulation around server centers, here Hetzner.

That’s a bit too ordered lol

Thank you for trying to get ahead of the spam influx. Trying to beat the spambots is a thankless task, but thank you anyhow.

What about people like me who host an instance just for themselves, but don’t have any communities in their own instance? What’s the risk for me of getting blacklisted? I didn’t find a clear answer by looking at the source code.

I assume the sus score would remain low, it seems to be looking for high number of accounts with extremely low posts and no/open registration

You have one user, who has as many comments and posts as you make yourself. This means that your suspicion score is going to be extremely low (under .5 if you post 2 times on any server).

Feels like an instance per bot would be pretty wasteful, so single user instances shouldn’t be considered suspicious. But maybe it’s more scalable than I’d think.

Maybe I’m being stupid, but how does this service actually determine suspicious-ness of instances?

If I self-host an instance, what are my chances of getting listed on here and then unilaterally blocked simply because I have a low active user count or something?

Important question; author kind of answers here:

https://lemmy.dbzer0.com/comment/204729

If I were to rely on this for my instance, I would require that it be completely transparent and open source. It doesn’t look like this is; you have to trust that it is making good selections, and give it power over your federation status. It’s a dangerous tool, IMO, but I can understand why it would have appeal right now.

I would require that it be completely transparent and open source. It doesn’t look like this is

Are you sure? Kinda seems like it is.

“The Lemmy Overseer” as I understand it is a backend service that gives us an API to use.

There is an open-source script for interacting with it. However, it does not tell you how that backend service works, exactly. It’s a black box with well defined interfaces, best case, as I understand it.

The python script only uses the open source Lemmy API. Everything else is contained within its few lines of code.

It’s based on a dynamic count. For now it’s a very simple measure: how many users there are per post. Each instance can set its own threshold for it.

It’s not about few users, it’s about tons of users and no activity. If you have 5000 users and 3 posts, it’s likely those are all spam accounts. This is what we’re checking for right now.
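To make the "users per post" idea concrete, here is a minimal sketch. It is not the Overseer's actual code: the `InstanceStats` fields, the choice to count posts and comments together as "activity", and the handling of zero activity are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class InstanceStats:
    """Hypothetical record; the field names are assumptions, not the real API."""
    domain: str
    users: int
    activity: int  # assumed to be posts + comments


def suspicion_score(stats: InstanceStats) -> float:
    """Users per unit of activity; a higher value means more suspicious."""
    # Assumption: treat zero activity as one so the score stays finite.
    return stats.users / max(stats.activity, 1)


def suspicious_instances(instances, threshold: float):
    """Return the domains whose score exceeds the admin-chosen threshold."""
    return [i.domain for i in instances if suspicion_score(i) > threshold]


instances = [
    InstanceStats("spam.example", users=5000, activity=3),
    InstanceStats("solo.example", users=1, activity=2),
]
flagged = suspicious_instances(instances, threshold=20)
```

With these made-up numbers, the 5000-user instance scores in the thousands while the single-user instance scores 0.5, so only the former is flagged at a threshold of 20.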

This is not a manual process currently, but I’m planning to add the possibility to whitelist and blacklist instances manually in the future.

Isn’t it trivial for bot farms to just spam posts on their home instance? And how does this handle cases where the number of posts is zero?

Ideally the list of behaviors which trigger suspicion would be expanded over time, yes? Low-hanging fruit first. Just because a check is easy to get around doesn’t mean spammers will program around it unless we actually check for it.

Looks like a very cool project, thanks for building it and sharing!

Based on the formula you mentioned here, it sounds like an instance with one user who has posted at least one comment will have a maximum score of 1. Presumably the threshold would usually be set to greater than 1, to catch instances with lots of accounts that have never commented.

This has given me another thought though: could spammers not just create one instance per spam account? If you own something like blah.xyz, you could in theory create ephemeral spam instances on subdomains and blast content out using those (e.g. spamuser@esgdf.blah.xyz, spamuser@ttraf.blah.xyz, etc.)

Spam management on the Fediverse is sure to become an interesting issue. I wonder how practical the instance blocking approach will be - I think eventually we’ll need some kind of portable “user trustedness” score.

Yes, constantly adding new domains and spamming with them is a probable vector, but I’m not quite sure it would work, given how federation works. I am not that familiar with the implementations.

“Lemmy Overseer” is a creepy, ominous name. It sounds like the job title of some super strict, rules-obsessed, joyless office drone who works for the feds or a large corporation.

This kind of talk really displeases the Overseer. You better watch yourself.

yeah it’s great isn’t it‽

I feel like the Python script is maybe a bit too extreme? 20 times more users than posts might happen on smaller instances that people use mostly to browse the big ones, I feel. I ran it with a suspicion ratio of 100 and it didn’t seem to block any “legit looking communities”. But then again, it is very hard to tell.

Thanks a lot for your work!

Yes, that’s why I leave the exact number up to each instance admin

Indeed, thanks a million for giving the community tools at this critical juncture. Very much appreciated!

Sounds great, especially for instances with open registration!

Awesome work! Hope kbin gets an API soon so it’ll be possible there

I really don’t love this. Couldn’t we extend the mastodon blocklist to cover Lemmy somehow? I don’t like automated blocking. I’d much rather find a list of trusted admins, and defederate with whatever 60 percent of them defederate with.
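The "defederate with whatever 60 percent of trusted admins defederate with" idea could be sketched roughly like this. Nothing like it exists in the Overseer as far as this thread says; the function name, the input shape (a mapping from admin to the set of domains they block), and the example domains are all hypothetical.

```python
from collections import Counter


def consensus_blocklist(admin_blocklists: dict, quorum: float = 0.6) -> set:
    """Domains blocked by at least `quorum` of the trusted admins.

    `admin_blocklists` maps an admin identifier to the set of domains
    that admin has defederated from (hypothetical input shape).
    """
    total = len(admin_blocklists)
    if total == 0:
        return set()
    # Count how many admins block each domain.
    counts = Counter(
        domain for blocked in admin_blocklists.values() for domain in blocked
    )
    return {domain for domain, n in counts.items() if n / total >= quorum}


trusted = {
    "admin-a": {"spam.example", "junk.example"},
    "admin-b": {"spam.example"},
    "admin-c": {"other.example"},
}
agreed = consensus_blocklist(trusted)
```

Here only `spam.example` reaches the quorum, since two of the three admins block it and the other domains are blocked by one admin each.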

I’m not going to force anyone to use this, but at the scale these spam accounts are increasing, we need something now.

Agreed. If a better system comes along, great but in the meantime I am glad to have this kind of initiative to prevent us from being swarmed.

Excellent! Thank you for doing this!

I’m loving the innovation in the fediverse, and all the people seeing issues, bringing them up, and at least attempting to fix them.

Thanks for the awesome work! I love seeing the OSS community building tooling for this stuff :D

However it does not look like it is open source.

Isn’t it? I had to go to the Python script and go up to find the main repo https://github.com/db0/lemmy-overseer

It’s also possible I’m missing something lol

Then maybe I got confused, sorry. Somebody mentioned it, and since the post was saying it’s a service I thought it wasn’t open. Will check it properly later. Shouldn’t have spoken so quickly, I guess

yea my bad, it looks open source :D

It’s all good :) I had to dig a little myself to find it haha

maybe that’s a dumb question, so sorry for that, but wouldn’t it be easier to just use a whitelist for federation instead of a blocklist?

That would lead to having to manually approve every instance by the admins. Mods can’t do that so it would 100% be on them to keep up with all the instances

Mostly because it would invalidate any single user self hosted instance, which I find is one of the big draws of the fediverse in general.

Thank you, I just set up a systemd timer for the Python script.

That’s a cool idea. Thanks for your work!

One question: Let’s say the script defederates an instance and then the admin of that instance cleans up their database and makes sure no new bots can register. Would your script remove them from the blacklist or would I have to manually check if all instances on my blacklist are still “infested” to give them a second chance?

If you run my script on a schedule, it should remove them from the defederation list, yes
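For anyone wondering what that "second chance" looks like on each scheduled run, here is a rough sketch of the reconciliation step. It is an illustration only, not the actual script: the function name is made up, and it assumes you keep track of which blocks were added automatically so that blocks added by hand are never lifted.

```python
def reconcile_blocklist(current_blocked, previously_auto_blocked, suspicious_now):
    """Compute the new defederation list for one scheduled run.

    Assumptions (not confirmed by the real script):
    - `current_blocked` is everything the instance blocks today,
    - `previously_auto_blocked` is what earlier runs added automatically,
    - `suspicious_now` is the fresh list of suspicious instances.
    """
    # Blocks an admin added by hand are kept no matter what.
    manual = set(current_blocked) - set(previously_auto_blocked)
    # Automatic blocks are replaced wholesale by the fresh list, so an
    # instance that cleaned up its spam accounts drops off on its own.
    return sorted(manual | set(suspicious_now))


new_list = reconcile_blocklist(
    current_blocked=["bad.example", "cleaned.example", "manual.example"],
    previously_auto_blocked=["bad.example", "cleaned.example"],
    suspicious_now=["bad.example", "new.example"],
)
```

In this made-up run, `cleaned.example` is no longer suspicious and is dropped, `new.example` is added, and the hand-added `manual.example` stays.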