For those who find it interesting, enjoy!

I really enjoy your transparency and style of communication!

Comparing it to Spez and how Reddit became prior to the migration, this is such a refreshing change

/u/Ruud is like /u/Spez but only if /u/Spez was actually cool.

Sooo… /u/Ruud is nothing like /u/Spez? Same energy as “Communism is like Capitalism but only if Capitalism got rid of the concept of capital”.

Yes that was the joke.

It gets me every time seeing people using the product I build 🥹

You worked on Grafana? Your product is awesome, I use it in my homelab for performance metrics

Yes, I’m one of the designers 👍🏾

deleted by creator

Poggers. Couldn‘t live wuthout it. Thank you for your work!

Love Grafana, especially the new UI. Great work, man. :)

That’s so cool! Grafana is awesome, the whole team did a great job

Thank you very much.

Do you work on Loci too?

Grafana is one of those tools which everyone should use if they have something they maintain themselves. Superb tool.

Grafana is the most essential application in my job. I can use Notepad to code in a world without IDEs. I couldn’t keep a damn thing running in the real world without Grafana. And I’ve been forced against my will to use alternatives in the past.

How did you learn it?

Basically brute force, I’m not great with it but I was the one on my team responsible for setting up our dashboards. I wrote the prometheus metric collection in our microservices and built the dashboards from that data.

There are tons of free dashboards though for monitoring resources and such so a lot of things I use are just downloaded from the Grafana website. And the docs are good too. So looking at examples + documentation is how I learn. It would be helpful if I was better with math though.

I guess it’s time to start browsing the dashboards thanks

You work at Grafana?

It’s been very snappy today, nice work! Is it all under Docker Compose with the node handling Nginx and Postgres as well?

Yes.

Why did you guys roll back the UI to .7 from .10? I enjoyed some of the UI improvements, but I guess there were some bugs?

Edit: I see its back to .10 maybe I had a browser tab open from before that I never refreshed

I‘m really grateful for your and your colleagues‘ work. Thank you for letting us lemmy around here!!!

I can’t believe how fast you’ve managed to crowdsource and fix things on this instance. I haven’t seen many problems at all sharing comments and things.

Dang that’s a lot of RAM

mastodon.world has the same server but with twice the RAM :-)

What chassis? I’ve got 256GB in an R720 but only 32 cores here!

It’s a AX161 server at Hetzner

€142 is more reasonable than I expected! I’ll toss some cash to help!

You should see some of our VM hosts at work…

This is awesome! As a systems engineer for my day job, I love seeing stuff like this!

31.2% load it’s damn fine considering how much attention Lemmy.world has been getting lately. Server is up for 3 weeks already so I guess that’s when you upgraded it?

Damn that’s a huge chunk of (what looks like) a 64 core CPU there. Impressive!

It’s cool it can aggressively cache that much. Although I am perplexed why one would have a swap file configured in this case? What does it give you here? Sorry not trying to be an elitist or anything just have no idea what advantage you get!

To be honest I tend to use swap less and less. But this was in the build that Hetzner does and I didn’t remove it.

If your application goes wild with RAM usage, a properly configured swap will make sure the underlying OS remains responsive enough to deal with it.

The OOM killer is usually triggered after it starts hitting the disk. Which means your system is unresponsive for a long time until it finally kills something.

Using something like oomd can help trigger before it hits swap but then why are you using swap in the first place?

The bigger issue is that the kernel sometimes ignores the swappiness and will evict code/data pages long before file cache even when set to 0 or 1. I’m still not sure if that was because of an Ubuntu patch or if it was an issue that’s been resolved in the years since I last saw this

How much is that in beans?

At least 1

Possibly 2

Let’s not go crazy

About tree fiddy

How far do you see lemmy.world capable of scaling to? One thing I’ve been noticing is the centralisation of Lemmy users on a few top servers, surely that cannot be healthy for federation? What are your thoughts on this?

@ruud@lemmy.world They should post their clustered setup so others can replicate more easily. It sounded like they had several webservers in front of a database (hopefully a cache box too).

Not entirely sure of what you are asking, but the only reason they need a clustered setup is simply because of their scale. Making the details of their setup public does not help with the issue I addressed, since in an ideal scenario, communities and users would be evenly distributed amongst the many Lemmy instances in the fediverse, making the need to do any sort of clustering for performance reasons unnecessary.

What I mean is if they post the specifics on how they setup a cluster of servers, other instance operators or people who want to start their own instance could more easily do so or just get off a single server configuration. No Lemmy instance should really be running on a single non-redundant box anyways, even if it’s only 2 small servers.

We do run on 1 server, but we’ve now seen that Lemmy scales horizontally so the k8s path forward is open 😊 With all these latest improvements we can have a bit more users on the current box.

Oh, I could a swore I read somewhere you went multi. Maybe I’m confusing another instance

Not trying to be pedantic, but why do they have to do so? Why can’t people figure it out themselves? Also, why can’t Lemmy instances run on single non-redundant boxes? Most instance operators don’t have the budget of enterprises, so why would they have to run their Lemmy’s like enterprises?

Not trying to be pedantic, but why do they have to do so? Why can’t people figure it out themselves?

Er, because we should all be working together to try to help Lemmy grow and be stable…? Because good-will and being nice and helpful to each other is intrinsically good?

Also, why can’t Lemmy instances run on single non-redundant boxes? Most instance operators don’t have the budget of enterprises, so why would they have to run their Lemmy’s like enterprises?

You can run on a single box, but a single problem will bring down your single box. This is a basic problem commonly discussed in DevOps circles.

Multi-server or containerized deploys aren’t only achievable by enterprise level companies. For example, one reasonably priced server on most providers is like $20-40/month. Say a load balancer as a service is another $10-20, and a database server or database as a service is also like $20-$40. A distributed, redundant setup would be like 2 webservers, a database, and a load balancer so like, $70? Maybe add in another server as a file host if Lemmy needs it (wordpress does iirc), or an additional caching server at a cheaper cost. And then you have a more stable service that can handle usage spikes better and users are more likely to stay around.

I’ve deployed clustered applications myself, I just haven’t looked into doing it with Lemmy and was curious if they had a run book or documentation.

Edit: or you use kubernetes or kubernetes as a service like ruud is saying they might look into. Could probably get it at the same cost.

Er, because we should all be working together to try to help Lemmy grow and be stable…?

I agree with this point, but I disagree with the context in which you mentioned, “They should post their clustered setup so others can replicate more easily”, right as a reply to my original comment asking how Ruud felt about the centralisation of users in a federated application. This should’ve been an entirely separate reply, or perhaps an issue on GitHub to the Lemmy authors.

You can run on a single box, but a single problem will bring down your single box. This is a basic problem commonly discussed in DevOps circles.

Again, I agree, but the context in which you mentioned it, basically suggests that everyone who runs single instance Lemmys are doing it wrong, which I disagree.

Lowering the entry requirements is part of how we can get wide-spread adoption of federated software. Not telling people that they have to have at least 2 instances with redundancies or they are doing it entirely wrong.

The bare minimum I would ask anyone running their own instance, is to have backups. They don’t need fancy load-balancers, or slaved Postgres database setups, or even multi-node redis caches for their instances of sub-thousand users.

For example, one reasonably priced server on most providers is like $20-40/month. Say a load balancer as a service is another $10-20, and a database server or database as a service is also like $20-$40. A distributed, redundant setup would be like 2 webservers, a database, and a load balancer so like, $70?

Seriously? That may be an acceptable price tag for a extremely public Lemmy host, like lemmy.world or lemmy.ml, but in no way should it be a reasonable price tag for the vast majority of Lemmy instances setup out there. Especially when most of them have sub-thousand users. $70/mo? That has to be a joke. You can easily host a Lemmy on a $5-$10 droplet for ~100 users.

I’ve deployed clustered applications myself, I just haven’t looked into doing it with Lemmy and was curious if they had a run book or documentation.

No offense, but you definitely seem like the kind of person to shill for cloud-scaling and disregard cost-savings.

Personally I can’t see a use-case for an instance that has ~100 users, people would just get bored and stop using it and move on to a more popular one. It’s not like a Minecraft server. Having people use a social media tool like Lemmy or a sub on Reddit is about having a critical mass of interesting content and users. But if there is such a small community, sure a single box is fine.

And load balancers are hardly fancy… if you know how to setup a webserver and write an nginx configuration, it’s like the next step of understanding. Digital ocean makes it incredibly easy.

pretty gauges. the instance seems to be more stable/responsive today

How much is this costing you? Also who is your host? Is it on a virtual machine?

They have a dedicated server: https://lemmy.world/post/75556

Whoa, cool. Thanks. Only a matter of time until it gets overloaded though. Can’t Lemmy run in a container service like Cloud Run or AWS App Runner?

Yeah, you could do it in AWS with ECS or Fargate.

https://github.com/jetbridge/lemmy-cdk

Indeed you can, very cool.

It’s actually pretty funny to see him mention the growth (almost 12k users!) considering they’ve added, what 50k or so users recently?

I signed up three days before that post. They were the largest instance with open signups. Almost 1000 users.

Dedicated means local?

No, it means it’s got the physical machine all to itself. It’s a rented server located in a Hetzner data center.

Dedicated usually means it’s not splitting cpu time with another instance. It could mean a local machine but it does not have to be one.

Tbh I’d see it hard to be local, so maybe it is cloud computing but a standalone instance as you just said.

My homies love dedicated servers

I know that the RAM cache is just taking advantage of otherwise free RAM and will be dropped in favor of anything else, but it does stress me out a bit to see it “full” like that.

It would stress me even more to see a lot of RAM doing nothing, that would be a shame! ;-)

Difference between Windows and Linux. Windows would only use what it needs. Linux pre-empts more and fills the RAM for what coul dbe needed.

It used to stress the shit out of me when I switched to Linux as I’d gotten used to opening task manager and seeing 90% free RAM. On Linux I’d be seeing 10% free and panicking thinking it was a resource hog.

The Linux-way is the best way.

I use Arch btw ;)

Both OSes do pre-caching and for both the standard tools to check usage nowadays ignore pre-cached elements when counting RAM usage.

I had a feeling that ‘factoid’ may be out of date! Since I learnt it about the time of Windows XP when we were shown examples of how Linux and Windows memory management differed. It all made sense why Linux seemed to have full RAM even after a big upgrade but WinXP gave the ‘illusion’ of having lots of free RAM to use. ~ 20yrs ago!

I think we used SuSE Linux 7.3!

I still hold a savage hatred of all RPM-based distros after dealing with the hell of early 2000’s editions (Redhat, Mandrake & Suse). Though I did like SuSE KDE’s colours when it worked!

But Windows also does pre caching?

It probably just didn’t mark that memory as “used” in the task manager.

I discovered this about 20yrs ago and there’s been a lot of drugs & drink since then.

I do remember I could open my shit-hot 256Mb RAM desktop with Windows XP taskmanager and it shows a whopping 128Mb free RAM. 😎

Then I’d boot into my ‘733T H4X0r’ Suse Linux 7.3 and top would show 5Mb free RAM. 😱

This caused much upset until I found out the two OS’s have (had?) fundamentally different memory utilisation philosophies.

May not be the case anymore but it was late 90s/early 00s.

That’s how it supposed to work, free RAM does nothing :)

It’s free real estate!

If you had this much buffer memory what are the reasons to have swap space as well?

With my servers I’m paranoid having swap enabled will inadvertently slow stuff down. Perhaps there’s a reason to have it that I’m unaware of?

If you had this much buffer memory what are the reasons to have swap space as well?

Many programs do stuff once during startup that they never do again, sometimes creating redundant data objects that will never get accessed in the configuration its being run in. Eventually the kernel memory manager figures out that some pages are never used but it can’t just delete them. If swap is enabled it can swap them to disk instead. It frees up that RAM for something more important. It’s usually minor but every few MB helps.

I personally like having some swap as during low memory situations (which lemmy gets at least once a day on my small instance) everything slows down rather than getting culled by the oom killer. It’s not a replacement for monitoring, but it does extend the timeframe to react to things.

Memcache usually takes all the assigned memory regardless of usage so seeing high usage isn’t always unusual. That’s assuming the lemmy servers are using some kind of session caching solution.

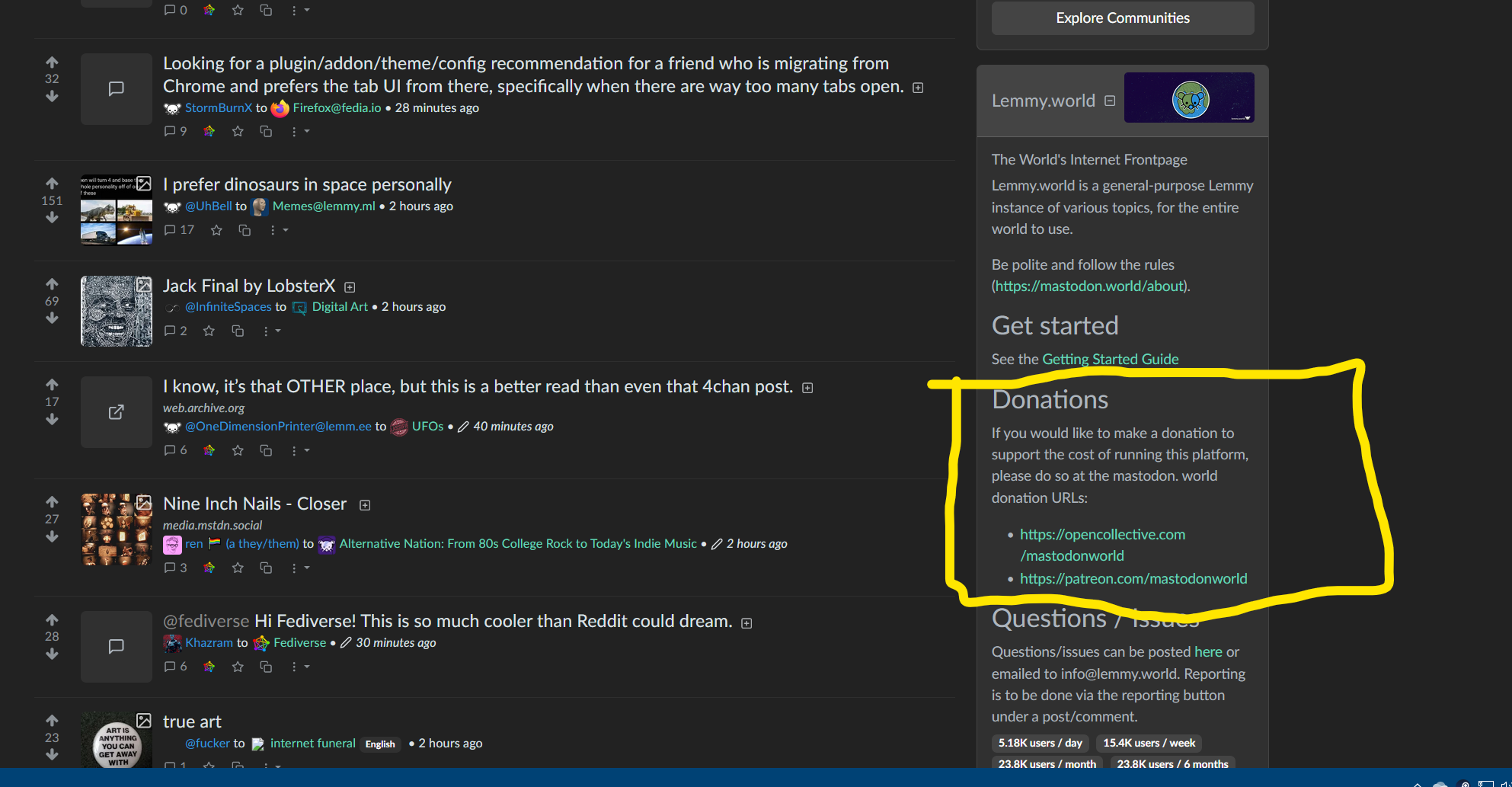

How can I throw some bucks in your direction?

From the lemmy.world front page:

Donations If you would like to make a donation to support the cost of running this platform, please do so at the mastodon.world donation URLs: https://opencollective.com/mastodonworld https://patreon.com/mastodonworldWhere in the frontpage can we see this?

Edit: thank you all!

It’s on the right-hand sidebar of lemmy.world:

Awesome! I’m on mobile, so I cannot see it. Will check it out when I get to my computer.

You can view sidebar on mobile. I think it’s in the three dots, but it’s somewhere!

EDIT: On Jerboa it’s under Community Info, under the three dots. On the mobile web app for L.W. there’s a sidebar button.

Just go to lemmy.world and click sidebar.