deleted by creator

It’s stealing work in a way that functionally launders it

Actually, in the age of basically permanent copyright, this brings at least some balance

deleted by creator

Don’t ignore the plethora of FOSS models regular people can train and use. They want to trick you into thinking generative models are a game only for the big boys, while they form up to attempt regulatory capture to keep the small guy out. They know they’re not the only game in town, and they’re afraid we won’t need them anymore.

deleted by creator

I’m not sure what you mean. FOSS generative image models are already better than the corpo paid for ones, and it isn’t even close. They’re more flexible and have way more features and tools than what you can get out of a discord bot or cloud computing subscription.

deleted by creator

Thanks for sharing your thoughts. I think I can see your side, but correct me if I misunderstood.

I don’t think we need primary ownership here. This site doesn’t have primary ownership in the social media market, yet it benefits users. Having our own spaces and tools is always something worth fighting for. Implying we need “primary ownership” is a straw man and emotional language like “massively”, “ridiculous”, and “left holding the bag” can harm this conversation. This is a false dilemma between two extremes: either individuals have primary ownership, or we have no control.

You also downplay the work of the vibrant community of researchers, developers, activists, and artists who are working on FOSS software and models for anyone to use. It isn’t individuals merely participating, it’s a worldwide network working for the public, oftentimes leading research and development, for free.

One thing I’m certain of is that no one can put a lid on this. What we can do is make it available, effective, and affordable to the public. Mega-corps will have their own models, no matter the cost. The web, personal computers, and smartphones were made by big corporations or governments, but we were the ones who turned them into something that enables social mobility, creativity, communication, and collaboration. It got to the point they tried jumping on our trends.

The corporations already have all the data, users literally gave it to them by uploading it. Open source only has scraped data. If you start regulating, you kill open source but the big players will literally just shrug it off.

Traditional artists already lost. It sucks but now we get to find out if the winner is all of society or only just Adobe and Shutterstock.

Yes, absolutely. They want AI to be people such that copyright applies and such that they can claim the AI was inspired just like a human artist is by the art they’re exposed to.

We need a license model such that AI is only allowed to be trained on content where the license explicitly permits it and that no mention is equal to it being disallowed.

We need a license model such that AI is only allowed to be trained on content where the license explicitly permits it and that no mention is equal to it being disallowed.

That is the default model behind copyright, which basically says that the only things people can use your copyrighted work for without a license are those which are determined to be “fair use”.

I don’t see any way in which today’s AI ought to be considered fair use of other people’s writings, artwork, etc.

The concepts contained within a copyrighted work are not themselves copyrighted. It’s impossible to copyright an idea. Fair use doesn’t even enter into it, you can read a copyrighted work and learn something from it and later use that learning with no restrictions whatsoever.

I feel two ways about it. Absolutely it is recorded in a retrieval system and doing some sort of complicated lookup. So derivative.

On the other hand, the whole idea of copyright or other so called IP except maybe trademarks and trade dress in the most limited way is perverse and should not exist. Not that I have a better idea.

Absolutely it is recorded in a retrieval system and doing some sort of complicated lookup.

It is not.

Stable Diffusion’s model was trained using the LAION-5B dataset, which describes five billion images. I have the resulting AI model on my hard drive right now, I use it with a local AI image generator. It’s about 5 GB in size. So unless StabilityAI has come up with a compression algorithm that’s able to fit an entire image into a single byte, there is no way that it’s possible for this process to be “doing some sort of complicated lookup” of the training data.
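The size argument above can be checked with a quick back-of-the-envelope calculation. This is only a sketch: the image count and model size are the round figures quoted in the comment, and the 100 KB "small JPEG" size is an assumed number used purely for comparison.

```python
# Round figures taken from the comment above.
DATASET_IMAGES = 5_000_000_000      # "five billion images"
MODEL_BYTES = 5 * 10**9             # "about 5 GB" (decimal gigabytes)

# Assumption for comparison only: a heavily compressed small JPEG.
SMALL_JPEG_BYTES = 100_000

# Storage budget the model would have per training image.
bytes_per_image = MODEL_BYTES / DATASET_IMAGES
bits_per_image = bytes_per_image * 8

# How many times too small that budget is to hold even a small JPEG.
shortfall = SMALL_JPEG_BYTES / bytes_per_image

print(f"{bytes_per_image:.1f} byte(s) per image ({bits_per_image:.0f} bits)")
print(f"A {SMALL_JPEG_BYTES:,}-byte JPEG is {shortfall:,.0f}x larger than that budget")
```

Under these assumptions the model has roughly one byte of capacity per training image, several orders of magnitude less than a stored copy would need.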

What’s actually happening is that the model is being taught high-level concepts through repeatedly showing it examples of those concepts.

I would disagree. It is just a big table lookup of sorts with some complicated interpolation/extrapolation algorithm. Training is recording the data into the net. Anything that comes out is derivative of the data that went in.

You think it’s “recording” five billion images into five billion bytes of space? On what basis do you think that? There have been efforts by researchers to pull copies of the training data back out of neural nets like these and only in the rarest of cases where an image has been badly overfitted have they been able to get something approximately like the original. The only example I know of offhand is this paper which had a lot of problems and isn’t applicable to modern image AIs where the training process does a much better job of avoiding overfitting.

Step back for a moment. You put the data in, say images. The output you got depended on putting in the data. It is derivative of it. It is that simple. Does not matter how you obscure it with mumbo jumbo, you used the images.

On the other hand, is that fair use without some license? That is a different question and one about current law and what the law should be. Maybe it should depend on the nature of the training. For example, reproducing images from other images seems less fair, while classifying images by type seems more fair. Lot of stuff to be worked out.

It is that simple.

No, it really isn’t.

If you want to step back, let’s step back. One of the earliest, simplest forms of “generative AI” is the Markov Chain algorithm. What you do with that is you take a large amount of training text and run it through a program to analyze it. What the program is looking for is the probability of specific words following other words.

So for example if it trained on the data “You must be the change you wish to see in the world”, as it scanned through it would first go “ah, the word ‘you’ is 100% of the time followed by the word ‘must’” and then once it got a little further in it would go “wait, now the word ‘you’ was followed by the word ‘wish’. So ‘you’ is followed by ‘must’ 50% of the time and ‘wish’ 50% of the time.”

As it keeps reading through training data, those probabilities are the only things that it retains. It doesn’t store the training data, it just stores information about the training data. After churning through millions of pages of data it’ll have a huge table of words and the associated probabilities of finding other specific words right after them.

This table does not in any meaningful sense “encode” the training data. There’s nothing you can do to recover the training data from it. It has been so thoroughly ground up and distilled that nothing of the original training data remains. It’s just a giant pile of word pairs and probabilities.
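A minimal sketch of the word-pair counting described above, using the same example sentence. The function name `build_transition_table` is made up for illustration, and lowercasing the text (so that "You" and "you" count as the same word) is an assumption added here.

```python
from collections import Counter, defaultdict


def build_transition_table(text):
    """Map each word to the probabilities of the words that follow it."""
    words = text.lower().split()

    # Count how often each word is followed by each other word.
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1

    # Turn the raw counts into probabilities; only these are kept.
    table = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        table[word] = {nxt: n / total for nxt, n in followers.items()}
    return table


table = build_transition_table(
    "You must be the change you wish to see in the world"
)
print(table["you"])  # {'must': 0.5, 'wish': 0.5}
```

The result holds only word pairs and probabilities, matching the 50/50 split for "you" worked out by hand above.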

It’s similar to how these more advanced AIs train up their neural networks. The network isn’t “memorizing” pictures, it’s learning concepts from them. If you train an image generator on a million images of cats you’re teaching it what cat fur looks like under various lighting conditions, what shape cats generally have, what sorts of environments you usually see cats in, the sense of smug superiority and disdain that cats exude, and so forth. So when you tell the AI “generate a picture of a cat” it is able to come up with something that has a high degree of “catness” to it, but is not actually any specific image from its training set.

If that level of transformation is not enough for you and you still insist that the output must be considered a derivative work of the training data, well, you’re going to take the legal system down an untenable rabbit hole. This sort of learning is what human artists do all the time. Everything is based on the patterns we learn from the examples we saw previously.

‘A big table lookup’ isn’t what’s going on here, go and look up what backpropagation and gradient descent are if you want to know what’s actually happening.
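For anyone who wants a feel for it, here is a toy sketch of gradient descent on a one-parameter model. It is nowhere near a real image model: the function name, learning rate, step count, and the made-up data (points on the line y = 3x) are all assumptions for illustration. Backpropagation is the method used to compute the same kind of gradient through many layers; with a single weight the gradient can be written out directly.

```python
def train_weight(examples, learning_rate=0.01, steps=500):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0
    n = len(examples)
    for _ in range(steps):
        # Derivative of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in examples) / n
        # Nudge the weight a small step against the gradient.
        w -= learning_rate * grad
    return w


# Made-up training data: five points on the line y = 3x.
examples = [(x, 3.0 * x) for x in range(1, 6)]
w = train_weight(examples)
print(round(w, 4))
```

After training, the only thing the "model" keeps is the single number `w`, adjusted a little at a time by the examples rather than storing them.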

We already knew that. This is just upholding an obvious decision that was really already established long before AI was on the scene. If a human didn’t create it, it can’t be copyrightable.

The problem that the courts haven’t really answered yet is: How much human input is needed to copyright something? 5%? 20%? 50%? 80%? If some AI wrote most of a script and a human writer cleaned it up, is that enough? There is a line, but the courts haven’t drawn that yet.

Also, fuck copyrights. They only benefit the rich, anyway.

Having some form of copyright IMO is good, like you said putting the line somewhere.

Current copyright is fucked (lifetime of the artist plus 70 years)

The way it used to be (14 years) wasn’t bad IMO

Copyrights don’t just benefit the rich, in fact they severely limit what big companies can do with what you create. On the other hand, the current copyright term in most places (70 years after the author’s death) is just ridiculous, and simply guarantees that you will not live to see most content you saw as a kid move into the public domain, while the current owners continue to make money that the original author will never see.

Copyrights don’t just benefit the rich, in fact they severely limit what big companies can do with what you create.

If a big company chose to copy what you created, and you tried to fight it in court, they would bury you in a years-long legal battle that would continue until you ran out of money, quit, or they themselves declared it not worth the money to defend.

Robert Kearns patented the intermittent windshield wiper, which all of the car companies stole, and he sued Ford. From Wikipedia: The lawsuit against the Ford Motor Company was opened in 1978 and ended in 1990. Kearns sought $395 million in damages. He turned down a $30 million settlement offer in 1990 and took it to the jury, which awarded him $5.2 million; Ford agreed to pay $10.2 million rather than face another round of litigation.

Copyrights. Only. Benefit. The. Rich.

Counterexample: the many cases of large companies (Best Buy, Cisco, Skype, etc.) being sued over violations of the GNU GPL. The original authors of the code often get awarded millions in damages because a large company stole their work.

But where do you draw the line between “a human did it using a tool” and “it wasn’t created by a human?”

Generative art exists, and can be copyrighted. Also a drawing made with Photoshop involves a lot of complex filters (some might even use AI!)

That said, I agree on the “fuck copyright” part.

The problem that the courts haven’t really answered yet is: How much human input is needed to copyright something? 5%? 20%? 50%? 80%? If some AI wrote most of a script and a human writer cleaned it up, is that enough?

Or perhaps even coming up with and writing a prompt is considered enough human input by some.

This is the U.S., we quite literally can’t uphold values to save our lives. Hollywood studios aren’t going to pause shit.

If AI-created art isn’t copyrightable then that means it’s public domain. But it’s entirely possible to create something that is copyrighted using public domain “raw materials”, you just need to do some work with it. And if those “raw materials” are never published, only the copyrighted final product, it’s going to be really hard for anyone else to make use of it. So I don’t really see how this is a big deal, especially not to Hollywood.

You can also copyright the original character and make AI generate all the motions of that character. Since the original was (human) created and copyrighted, it doesn’t matter that AI-created art derived from that character isn’t copyrightable in and of itself.

Plus there are also trademarks for character likeness.

All in all, I agree with you, this is a non issue for Hollywood studios.

While cameras generated a mechanical reproduction of a scene, she explained that they do so only after a human develops a “mental conception” of the photo, which is a product of decisions like where the subject stands, arrangements and lighting, among other choices.

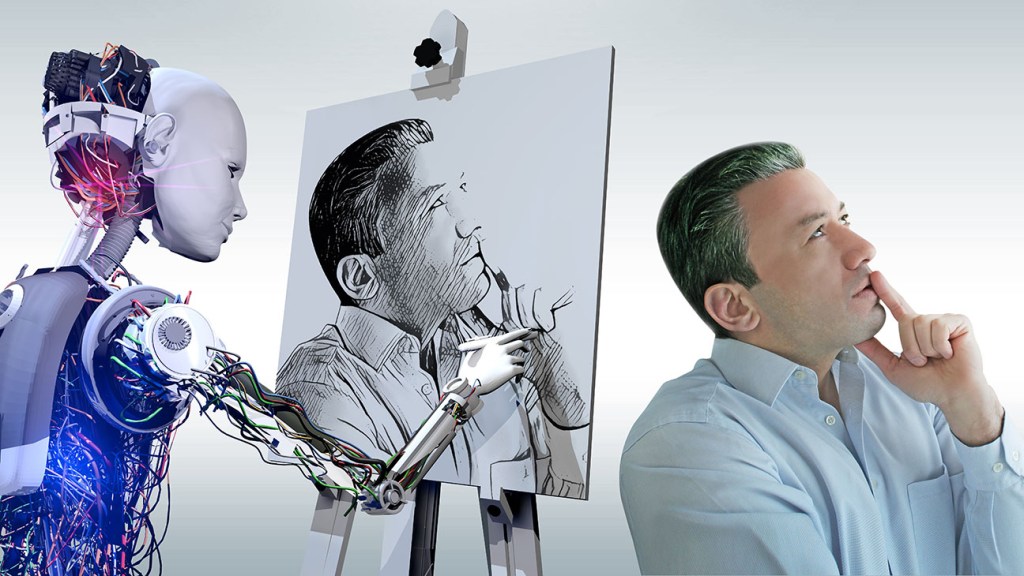

I agree. The types of AIs that we have today are nothing more than mixers of various mental conceptions to create something new. These mental conceptions come with life experience and are influenced by a person’s world view.

Once you remove this mental conception, will the AIs that we currently have today be able to thrive on their own? The answer is no.

deleted by creator

But this weird, almost religious devotion to some promise of AI and the weird white knighting I see folks do for it is just baffling to watch.

When you look at it through the lens of the latest get-rich-quick-off-some-tech-that-few-people-understand grift, it makes perfect sense.

They naively see AI as a magic box that can make infinite “content” (which of course belongs to them for some reason, and is fair use of other people’s copyrighted data for some reason), and infinite content = infinite money, just as long as you can ignore the fundamentals of economics and intellectual property.

People have invested a lot of their money and emotional energy into AI because they think it’ll make them a return on investment.

they say “well you can’t expect it to be like or treat it like people.” It’s maddening.

Current AI models are 100% static. They do not change, at all. So trying to ascribe any kind of sentience to them, or anything going in that direction, makes no sense at all, since the models fundamentally aren’t capable of it. They learn patterns from the world and can mush them together in original ways. That’s neat and might even be a very important step towards something more human-like, but that’s all they do; AI is not people. They don’t think while you aren’t looking and they aren’t even learning while you are using them. The learning is a completely separate step in these models. Treating them like a person is fundamentally misunderstanding how they work.

But this weird, almost religious devotion to some promise of AI

AI can solve a lot of problems that are unsolvable by any other means. It also has made rapid progress over the last 10 years and seems to continue to do so. So it’s not terribly surprising that there is hype about it.

“can we just stop and think about things for a second before we just unleash them on ourselves?”

Problem with that is, if you aren’t developing AI right now, the competition will. It’s just math. Even if you’d outlaw it, companies would just go to different countries. Technology is hard to stop, especially when it’s clearly a superior solution to the alternatives.

Another problem is that “think about things” so far just hasn’t been very productive. The problems AI can create are quite real; the solutions, on the other hand, much less so. I do agree with Hinton that we should put way more effort into AI safety research, but at the same time I expect that to just show us more ways in which AI can go wrong, without providing anything to prevent it.

I am not terribly optimistic here, just look at how well we are doing with climate change, which is a much simpler problem with much easier solutions, and we are still not exactly close to actually solving it.

When I generate AI art I do so by forming a mental conception of what sort of image I want and then giving the AI instructions about what sort of image I want it to produce. Sometimes those instructions are fairly high-level, such as “a mouse wearing a hat”, and other times the instructions are very exacting and can take the form of an existing image or sketch with an accompanying description of how I’d like the AI to interpret it. When I’m doing inpainting I may select a particular area of a source image and tell the AI “building on fire” to have it put a flaming building in that spot, for example.

To me this seems very similar to photography, except I’m using my prompts and other inputs to aim a camera at places in a latent space that contains all possible images. I would expect that the legal situation will eventually shake out along that line.

This particular lawsuit is about someone trying to assign the copyright for a photo to the camera that took it, which is just kind of silly on its face and not very relevant. Cameras can’t hold copyrights under any circumstances.

This is the Stephen Thaler thing, which is pretty much just a nonsense digression from the real issues of AI art. For some reason Thaler is trying to assert that the copyright for an AI-generated piece of art should belong to the AI itself. This is a non-starter because an AI is not a person in a legal sense, and copyrights can only be held by legal persons. The result of this lawsuit is completely obvious and completely useless to the broader issues.

They really are screwing up the headlines on these articles and giving everyone terrible takes on AI. As if their takes weren’t ignorant enough to begin with.

Yay! Creatives can have a decent job and they can eat too now!

I wouldn’t say all copyright is bad, but rather copyright law as it is is rife with abuse and bad actors. Big corporations use it like a hammer, and small creators aren’t able to go up against big corporations if they steal copyrighted work. And copyright/patent trolls are able to shut down anything and everything no questions asked.

There needs to be some way for individuals to have some protections for their work, but the current system ain’t it. (And this is before AI)

Not much changes with this ruling. The whole thing was about trying to give an AI authorship of a work generated solely by a machine and having the copyright go to the owner of the machine through the work-for-hire doctrine.

deleted by creator

These models are just a collection of observations in relation to each other. We need to be careful not to weaken fair use and hand corporations a monopoly of a public technology by making it prohibitively expensive for regular people to keep developing our own models. Mega corporations already have their own datasets, and the money to buy more. They can also make users sign predatory ToS allowing them exclusive access to user data, effectively selling our own data back to us. Regular people, who could have had access to a corporate-independent tool for creativity, education, entertainment, and social mobility, would instead be left worse off with fewer rights than where they started.

deleted by creator

deleted by creator

deleted by creator

The only thing this ruling really says is “AIs are not legal persons and copyright can only be held by legal persons.” Which is not particularly unexpected or useful when deciding other issues being raised by copyrighting AI outputs.

🤖 I’m a bot that provides automatic summaries for articles:

More than 100 days into the writers strike, fears have kept mounting over the possibility of studios deploying generative artificial intelligence to completely pen scripts.

The ruling was delivered in an order turning down Stephen Thaler’s bid challenging the government’s position refusing to register works made by AI.

Copyright law has “never stretched so far” to “protect works generated by new forms of technology operating absent any guiding human hand,” U.S. District Judge Beryl Howell found.

His complaint argued that the office’s refusal was “arbitrary, capricious, an abuse of discretion and not in accordance with the law” in violation of the Administrative Procedure Act, which provides for judicial review of agency actions.

While cameras generated a mechanical reproduction of a scene, she explained that they do so only after a human develops a “mental conception” of the photo, which is a product of decisions like where the subject stands, arrangements and lighting, among other choices.

In another case, a federal appeals court said that a photo captured by a monkey can’t be granted a copyright since animals don’t qualify for protection, though the suit was decided on other grounds.

Saved 77% of original text.