It IS a pretty solid suggestion, sandwiches are the best.

lol I saw one for copilot which was along the lines of

“Which sweater should I wear today, red or blue”

“I can’t answer that, what are you going for”

“Hot”

“Pick red because it will make a bold statement”

🙄

That is so dumb it’s almost funny (almost).

It’s a great encapsulation of what AI does: it literally gives you the most average reply possible to any topic, yet people are treating it like some sort of oracle.

Average with a touch of randomness and hallucinations! Don’t forget the best bits.

knuckle sandwich it is then

Psychics are still in business and horoscopes are still in newspapers and they pretty much do the same thing. Must be in human nature to be gullible

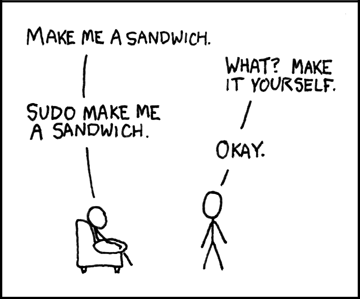

Relevant xkcd

And that’s just the false advertising. The reality is more like:

“Hey ChatGPT, I’m hungry. What should I have for lunch?”

“Uranium contains about 18 million calories…”

Then you ask it for a seahorse emoji and it has a stroke.

omg a sammich that’s crazy

Not sure why it’s offering soup as an option though. It’s 35°C+ at the minute.

How about a carpaccio

“Make sure to eat one small rock a day as well.”

Copying my post from elsewhere

Seattle baseball is doing well this year, and there are so many ChatGPT ads. There’s one where a guy is doing pull-ups. The text shows him asking ChatGPT for help doing pull ups. The response, as printed on the ad, generously typed by me for your convenience:

8-Week Pull-up Progression Plan

Weekly Schedule

- Day 1 - Strength focus

- Day 2 - Rest or light activity

- Day 3 - Volume focus.

- Day 4 - Core and mobility.

- Day 5 - Full-body strength (optional).

Core exercises

- Negative pull-ups - 3x5 (5-10 seconds).

- Assisted pull-ups (bands or a machine) - 3x6-10.

- Inverted rows (under a bar) - 3x8-12.

- Lat pull-downs (if gym access) - 3x10-12.

- Dead hangs - 3x30 seconds

Supporting Work

- Core - Hanging leg raises, planks, and hollow holds.

- Biceps - Hammer curls and chin-ups

- Grip - Farmer’s carries and towel hangs

- Mobility - Scapular shrugs and shoulder abduction.

Tips for Faster Progress

- Do pull-ups when fresh.

- Track your reps weekly.

- Protein and calories matter

Wow, great advice. If I knew what a hammer curl was, I probably wouldn’t need your advice that “calories matter.” Also what does 3x6-10 mean? Reps, sets, and …?

Day 1, “strength focus” what the hell does that mean? You don’t say what strength focus is. You only provided core exercises. How come hanging leg raises and planks from supporting work aren’t listed in the core exercises? If day 2 is a rest day, then what are days 6 and 7?

Like what is the plan? Do they hope you don’t even read the text? Is that why it scrolls so fast?

That is hilarious. I know this would be giving the producers too much credit here but I wish it was malicious compliance on their part. They could have copied any of the thousands of workout/food plans that already exist but chose to use what I’m sure is chatgpt’s actual garbage reply.

[Edit] OK I’m a hater but I need to take that last part back. I asked chatgpt the same question as the video and…it gave me a great reply? A decent lifting routine with explanations, and “bonus tips” on protein/sleep. And then at the end:

If you tell me:

- whether you have gym access or home setup

- your current pull-up ability (can you hang? partial pull-up? full one?)

- and how often you can train

…I’ll tailor this into a week-by-week plan with exact sets/reps and progression tracking.

Wanna do that?

So credit where credit’s due, I guess. The workout routine isn’t anything special but I see the appeal with the followup questions

Mind you, LLMs can be quite inconsistent. If you repeat the same question in new chats, you can easily get a mix of good answers, bad answers, bafflingly insane answers, and “I’m sorry but I cannot support terrorism”.

Yeah, that is definitely true. I just wanted to take back my knee-jerk reaction of “OF COURSE CHATGPT WOULD SPIT OUT SOMETHING SO STUPID” after being proven wrong.

I tried it both on my phone and work PC and got different but equally decent replies. Nothing you couldn’t find elsewhere without boiling the planet, though

That’s the true danger of the tools, imo. They aren’t the digital All Seers their makers want to market them as, but they also aren’t the utterly useless slop machines the consensus on the Fediverse appears to vehemently be.

Of course they’re somewhere in-between. Spicy autocomplete sounds like a put-down but there is spice. I prefer to think of LLMs as word calculators, and I mean that literally: if you approach them with a similar plan of action as you would an actual calculator, you’ll get similar results.

Difference is of course that math is not fuzzy and open to nuance, and 1+1 will always equal 2.

But language isn’t math, and it’s trickier to get a sense for what the “equations” that will yield results are, so it’s easy to disparage the technology as a concept given (as you rightly point out) the boiling of the planet.

Work has been insisting we tool with an LLM and they’re checking but thankfully my role doesn’t require relying on any facts the machine spits out.

Which is another part of why the technology is so reviled/misunderstood; the part of the LLM that determines the next word doesn’t and can’t judge the veracity of its output. Any landing on factual info is either a coincidence or a result of the fact that you as the user knew to “coach” things in such a way as to arrive at the most likely output, which you already knew was correct.

Because of the uncertainty, it is simply unwise to take any LLM’s output as factual. Any fact-checking capacity isn’t innate; it comes from other operations being done on the output, if any.

Then there are all the other reasons to hate the things, like who makes them, how they’re made, how they’re wielded, etc, and I frankly can’t blame anyone for vehemently hating LLMs as a concept.

But it’s disingenuous to think the tech is wholly incapable of anything of merit to anyone and only idiots out there are using it (even if that may still be often the case).

Or put another way: A sailor hidden within the ship’s hold will still drown and die alongside the ones that don’t resist the siren call above and pilot straight for the rocks.

Cool stuff, deployed in maximally foolish ways. I think I rambled a bit there, but hopefully a bit of my point made it across.

And this is the response that they thought was good enough to put in an ad

Why bother fucking around with chatgpt, it’s an ad. They probably combed over it and adjusted the text.

I just assumed it was from chatgpt because it’s so shit. Surely they’d come up with something better if they were going to fake it…

I’m certainly not going to pretend that this feels like a well laid out and clear training plan, because it’s not. But a couple of your specific objections don’t really work for me.

If I knew what a hammer curl was

I would not expect a training plan to explain this. The person can consult a separate document (ideally a real trainer, but videos are a decent secondary option, and as a distant last resort they could ask the AI to describe it) to learn how to do a hammer curl.

Also what does 3x6-10 mean?

I would interpret this as 3 sets of between 6 and 10 reps each. It’s giving you room to adapt the plan to what feels right. The use of a hyphen or en dash seems pretty standard to me, a person who is vaguely familiar with the use of numbers in English.

Your penultimate paragraph is the one that really hits the mark for me. It’s almost like each of the bullet lists and headings is created on its own, without regard for what the others contain. It’s not a cogent training plan. It has a bunch of individual pieces that kinda make sense as components of a plan, but it’s like you took a jigsaw puzzle and forcibly jammed together pieces of it that are obviously from the same general area of the puzzle, but aren’t meant to be actual neighbours.

I’ve tried this before but the AI is very resistant to making choices for the user. The actual response is typically something like “There are many different things you could have for lunch! You should choose something to balance important factors like cost, health, speed, and taste…”

wow.

such food.

much exercise

Every home assistant ad is like “Alexa, 55 BURGERS, 55 FRIES, 55 TACOS, 55 PIES, 55 COKES, 100 TATER TOTS, 100 PIZZAS, 100 TENDERS, 100 MEATBALLS, 100 COFFEES, 55 WINGS, 55 SHAKES, 55 PANCAKES, 55 PASTAS, 55 PEPPERS AND 155 TATERS”

And my Alexa orders it.

How does it know I like sandwich? Amazing.

Wow, you like sandwich, too? We should hang out!

if i can pick the sandwich place. i’m very picky about bread.

They really do think the consumer is that dumb or maybe they want them to be that dumb?

Babification using AI Enshittification?

I mean AI customers ARE exactly that dumb.

Is it still enshittification if it started out shitty?

There can always be more shittification if the shareholders demand it.

How would enshittification create babification? If the product is less good then people rely on it less.
