Why would an RTX 4090 make Python faster?

Don’t worry, this post was written by a first-year computer science student who just learned about C. No need to look too closely at it.

Rust is better.

The only language worth discussing is brainfuck

Purest of the programming languages

Joke’s on you, he was talking about “Phyton”. /s

I bet an LLM could have written this meme without making that mistake.

Embarrassing.

The new favorite language of AAA game studios:

Phyton

Python

Well, it just demonstrates how slow unoptimized software is.

If you’re going to create a meme like this, at least learn how computers and software work.

If a 4090 doesn’t make stuff faster, then why do my games run faster with it? /s

Easy, your 4090 is made of pure assembly.

Because games need marketing to run.

They apparently like making memes shitting on people & things without really understanding why, like the one about the Nvidia CEO.

Screaming at my single-threaded, synchronous web scraper “Why are you so slow, I have a 4090!”
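The joke works because a GPU does nothing for code that spends its time waiting on the network. A minimal sketch, assuming a hypothetical `fake_fetch` that simulates request latency with `time.sleep` (no real HTTP library is used), shows that overlapping the waits with threads is what actually speeds up an I/O-bound scraper:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Hypothetical stand-in for an HTTP request: only waits on simulated I/O."""
    time.sleep(delay)
    return f"<html>{url}</html>"


def scrape_sequential(urls):
    # One request at a time: total time is roughly len(urls) * delay.
    return [fake_fetch(u) for u in urls]


def scrape_concurrent(urls, max_workers=10):
    # Threads overlap the waiting; the GIL is released while a thread sleeps
    # or blocks on I/O, so the waits run in parallel. Results keep input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_fetch, urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    for scrape in (scrape_sequential, scrape_concurrent):
        start = time.perf_counter()
        pages = scrape(urls)
        elapsed = time.perf_counter() - start
        print(f"{scrape.__name__}: {len(pages)} pages in {elapsed:.2f}s")
```

Neither version touches the GPU; the difference comes entirely from not sitting idle while each simulated request is in flight.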

What makes you think Python is unoptimized and bloated?

Did you know the vast majority of AI development happening right now is in Python? The thing that literally consumes billions of dollars’ worth of even-beefier-than-4090 GPUs like the A100. Don’t you think that if they could do this more efficiently and better in C or assembly, they would? They would save billions.

The reality is that there is no benefit to moving away from Python to lower-level languages. There is no poor optimization to be found. In fact, if they tried this in lower-level languages, it would take even longer to optimize and yield worse results.

TBF, using AI as an example isn’t the best choice when it consumes an ungodly amount of power.

It makes the best example because there’s that much more money to be saved.

I’m happy if it’s actually running in Python and not a JavaScript app with Electron.

Idk, it’s rare for an Electron app to literally not even run. Meanwhile, I have yet to encounter a Python app that doesn’t require me to Google what specific environment the developer had and recreate it.

I think with pyenv and pipenv/uv you can create pretty reliable packaging. But it’s not as common as Electron, so it’s a pain.

That’s fair.

With a properly packaged Python app, you shouldn’t even notice you’re running a Python app. But yeah, for some reason there are a lot of them that … aren’t.

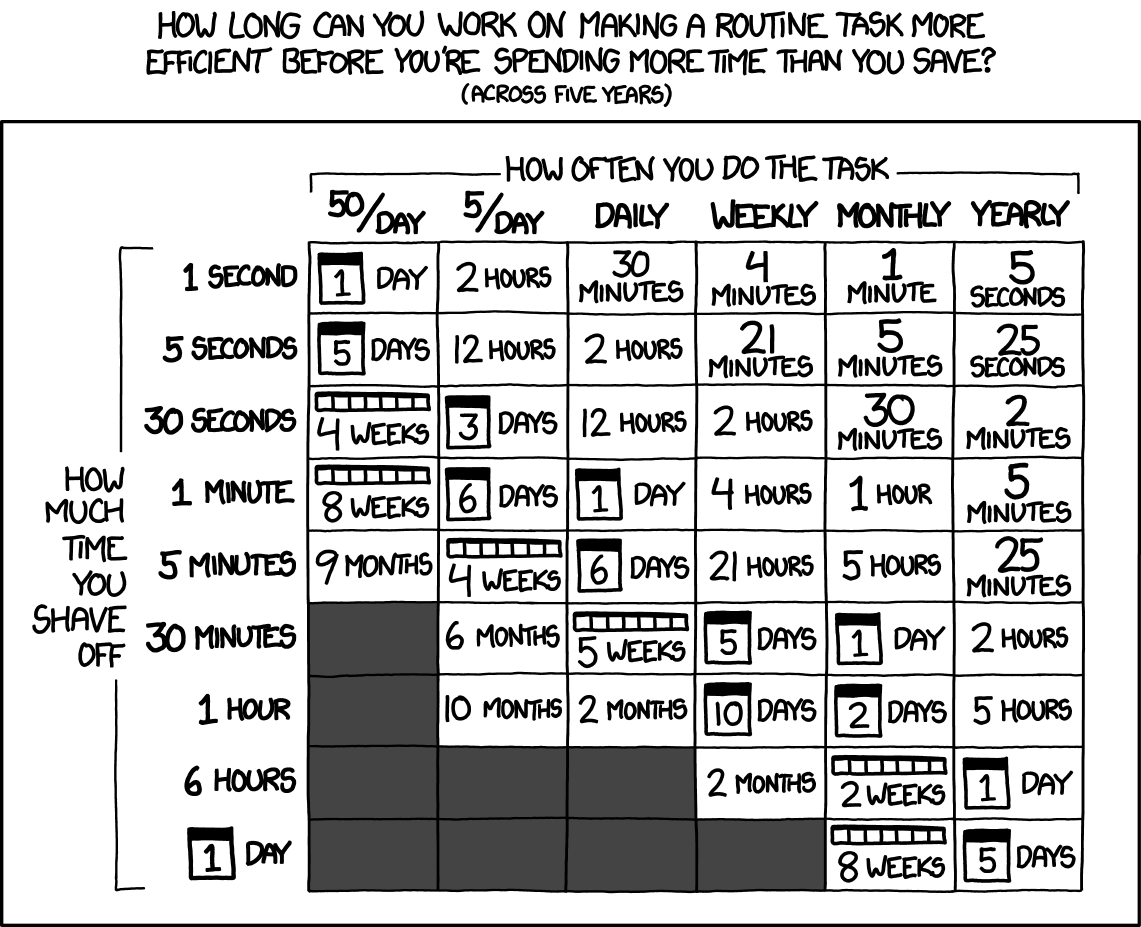

Ah yes, those precious, precious CPU cycles. Why spend one hour writing a Python program that runs for five minutes, if you could spend three days writing it in C++ and have it finish in five seconds? Way more efficient!

Because when it comes to actually getting paid work done, all the bloat adds up, and those 3 days up front could shave weeks or months off your yearly tasks. XKCD has a comic about how much time you can spend on a problem before the effort outweighs the productivity gains. If the task runs daily or hourly, you can actually spend a lot of time automating it and still get paid back.

And note that this is a single instance of the task. Imagine a team of people all using your code for that task: you get a quicker ROI, or you can multiply the dev time you can justify by the number of people.

That also goes to show why to not waste 3 days to shave 2 seconds off a program that gets run once a week.
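The break-even reasoning in this exchange is simple arithmetic. A minimal sketch, assuming the five-year horizon used by XKCD #1205 ("Is It Worth the Time?") and assuming "3 days" means three 8-hour workdays; the function names are hypothetical:

```python
HOUR = 3600            # seconds
WEEKS_PER_YEAR = 52


def total_time_saved(saved_per_run_s, runs_per_week, users=1, years=5):
    """Seconds saved across all users over the horizon (five years, as in XKCD 1205)."""
    return saved_per_run_s * runs_per_week * WEEKS_PER_YEAR * years * users


def worth_optimizing(extra_dev_s, saved_per_run_s, runs_per_week, users=1, years=5):
    """True if the total time saved exceeds the extra development time spent."""
    return total_time_saved(saved_per_run_s, runs_per_week, users, years) > extra_dev_s


# Assumption: three 8-hour days of C++ versus one hour of Python.
extra_dev = 3 * 8 * HOUR - 1 * HOUR   # 82,800 s of extra work
saved = 5 * 60 - 5                    # 5 min Python run vs 5 s C++ run: 295 s per run

print(worth_optimizing(extra_dev, saved, runs_per_week=1))            # False: one person, weekly
print(worth_optimizing(extra_dev, saved, runs_per_week=1, users=10))  # True: ten people, weekly
print(worth_optimizing(extra_dev, saved, runs_per_week=7))            # True: one person, daily
print(worth_optimizing(3 * 8 * HOUR, 2, runs_per_week=1))             # False: 3 days to shave 2 s weekly
```

Under these assumptions, the same rewrite flips from "not worth it" to "worth it" once ten people run it weekly or one person runs it daily, while shaving 2 seconds off a weekly job saves only 520 seconds in five years.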

Agreed. Or look at the manual effort: is it worth coding, or should you just do one-offs by hand? A coworker would code a bunch of mundane tasks for single problems, whereas I would check whether it would actually save time or whether I should just manipulate the data manually myself.

You can write perfectly well structured and maintainable code in Python and still be more productive than in other languages.

This site has good benchmarks of unoptimized and optimized code in several languages. C++ blows Python away. https://benchmarksgame-team.pages.debian.net/benchmarksgame/index.html

The SDLC can be made inefficient to maximize billable hours, but that doesn’t mean the software is inherently badly architected. It could just have a lot of unnecessary boilerplate that you could optimize out, but it’s soooooo hard to get tech debt prioritized on the roadmap.

Killing your own velocity can be done intelligently; it’s just that most teams aren’t killing their own velocity because they’re competent, they’re doing it because they’re incompetent.

> And note that this is a single instance of the task. Imagine a team of people all using your code for that task: you get a quicker ROI, or you can multiply the dev time you can justify by the number of people.

In practice, it’s only a quicker ROI if your maintenance plan is nonexistent.

Welp, microcontrollers say hi

Welp, I’m not saying you should use Python for everything. But for a lot of applications, developer time is the bottleneck, not computing resources.

So, I’ve noticed this tendency for Python devs to compare against C/C++. I’m still trying to figure out why they have this tendency, but yeah, other/better languages are available. 🙃

Exactly! I prefer Python or Ruby or even Java MUCH more than assembly, and maybe C.

I mean, I’d say it depends on what you do. When I see grad students writing numeric simulations in Python, I do think it would be more efficient to learn a language that is better suited for that. And I know I’ll be triggering many people now, but there is a reason why C and Fortran are still here.

But if it is for something small, yeah of course, use whatever you like. I do most of my stuff in R and R is a lot of things, but not fast.

> But if it is for something small, yeah of course, use whatever you like.

Or if you have a deadline and using something else would make you miss it.

I know it makes me sound like an old man shouting at clouds, but the other day I installed Morrowind and was genuinely blown away by how smoothly and reliably it ran, with all the content in the game fitting in 2 GB of space. Skyrim requires me to delete my other games to make room, and it still needs a whole second game’s worth of mods to match the stability and quantity of Morrowind.

High-res textures (especially normal maps) and higher quality/coverage audio really made game sizes take off. Unreal’s new “Nanite” tech, where models can have literally billions of polygons, actually reduces game size because it doesn’t need normal maps.

Back in the day, Morrowind was unoptimized too: https://kotaku.com/morrowind-completely-rebooted-your-xbox-during-some-loa-1845158550

That’s fair, though honestly the only issue I ever had on the Xbox was having a loading screen every 5 minutes.

Yes, but also community rewrite of the Morrowind engine, to make it even more better: https://openmw.org/

Admittedly, some changes might make it use more resources, for example it’s got basically no loading screens, because nearby cells get loaded before you enter them…

I’m actually giving that a try now because it comes packaged with Bazzite! I just wish I could figure out modding lol

“Python is bloat”? Wait until you look at the Node.js “node_modules” folder.

Phyton

Love you homie 💋 walks away

Amateurs, I only write software by punching holes in steel plates with my hammer.

Amateurs, I write my software with a magnetized needle and a steady hand.

It used to be pretty terrible, but the frameworks are getting there, starting with the languages they are based on.

Believe it or not, Java has been optimized a ton and can be written to be very efficient these days. Another great example of a high-level, high-efficiency language is Julia. And then there is Rust of course, which basically only sacrifices memory-efficiency for C-speeds with Python-esque comfort. It’s getting better.

plus all the spying and the “telemetry” bs

Love phyton

No Phyton, Jiverscrap is best.

Tbh this all seems to be related to following principles like SOLID or following software design patterns. There are a few articles about CUPID, SOLID performance hits, etc. They all suggest that following software design patterns costs about a decade of hardware progress.

Absolutely not lol.

If SOLID is causing you performance problems, it’s likely completely solvable.

Most companies throwing out shitty software have engineers who couldn’t tell you what SOLID is without looking it up.

Most people who use this line of reasoning don’t have an actual understanding of how often patterns are applied or misapplied in the industry and why.

SOLID might be a bottleneck for software that needs to be real-time compliant with stable jitter and ultra-low latency, but the vast majority of apps are just spaghetti code.

true…

“bloat” is just short for “your computer sucks”.

Dump your peasant-tier shit and go fill up that 42U rack.

no u