OK, let me give a little context. I will turn 40 in a couple of months, and I have been a C++ software developer for more than 18 years. I enjoy coding, and I enjoy writing “good” code: readable and so on.

However, for the past few months I have become really afraid for the future of the job I love, given the progress of artificial intelligence. I often can’t sleep at night because of this.

I fear that my job, while not disappearing completely, will become a very boring one consisting of debugging automatically generated code, or that it will disappear altogether.

For now, I’m not using AI. A few of my colleagues do, but I don’t want to, because (1) it removes part of the coding I like, and (2) I have the feeling that using it is sawing off the branch I’m sitting on, if you see what I mean. I fear that in the near future, people who don’t use it will be fired because management sees them as less productive…

Am I the only one feeling this way? I have the feeling that all tech people are enthusiastic about AI.

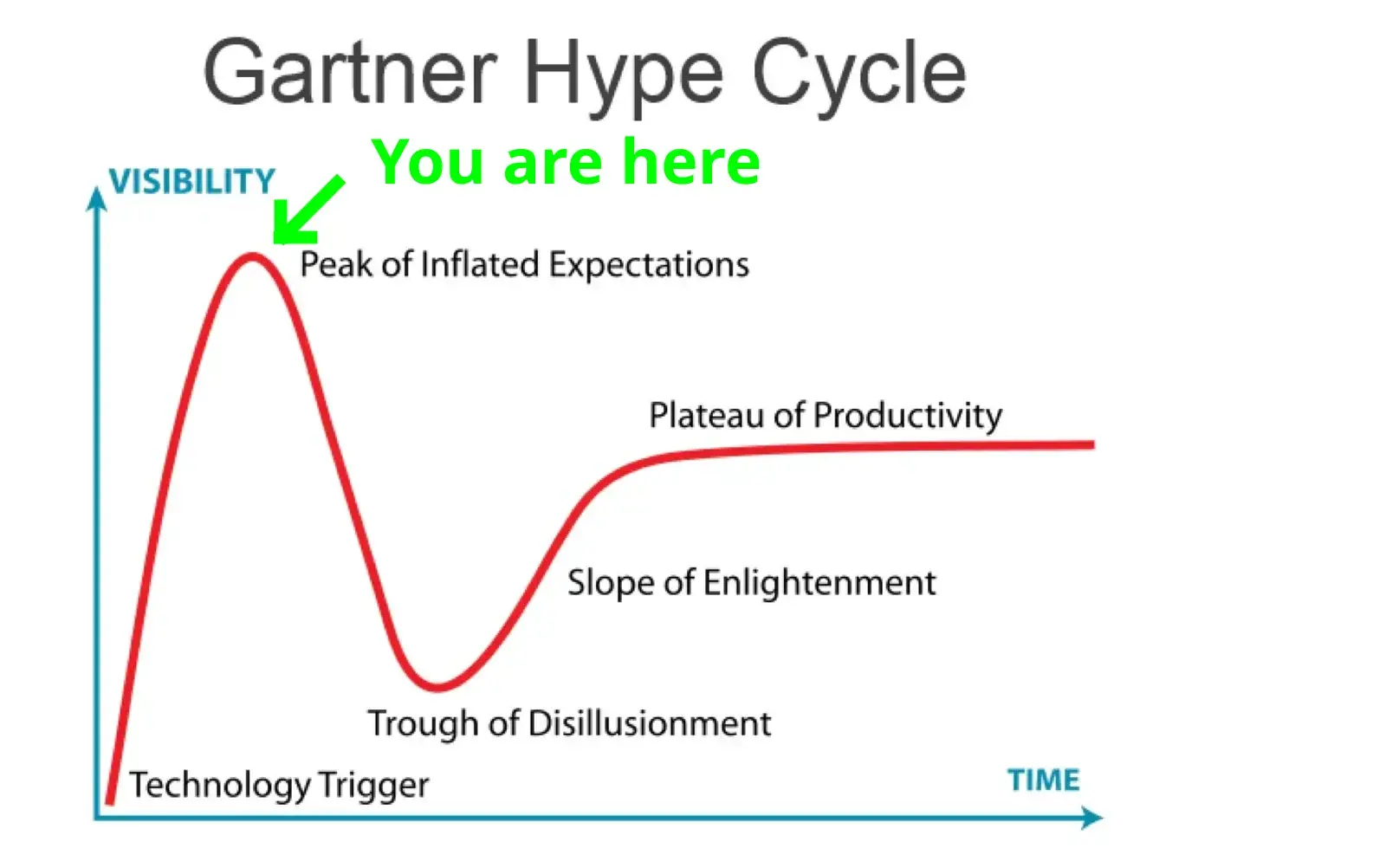

The trough of disillusionment is my favorite.

Kind of nice to see NFTs breaking through the floor at the trough of disillusionment, never to return.

Betteridge’s law of headlines: No.

Currently at the crossroads between the trough of disillusionment and the slope of enlightenment.

I’m not worried about AI replacing employees

I’m worried about managers and bean counters being convinced that AI can replace employees.

It’ll be like outsourcing all over again. How many companies outsourced, then walked it back several years later and now only hire in the US? It could be really painful in the short term if that happens (if you consider several years to a decade short term).

Given the degree to which first-level customer service is required to stick to a script, I could see over half of call centers being replaced by LLMs over the next 10 years. The second level service might still need to be human, but I expect they could be an order of magnitude smaller than the first tier.

They’re supposed to be on script but customers veer off the script constantly. They would be extremely annoyed to be talking to AI. Not that it would stop some companies but it would be terrible customer service.

That’s what tier 2 service would be for. But the vast majority of calls are people wanting to execute a simple order or transaction, or ask a silly question they could have googled.

If your problem can be solved by a bot, and that means you can be done immediately instead of waiting on hold for 20+ minutes for tier 2 support, you’re going to prefer it.

Also, we’ve come a long way in just 2-3 years. It’s very hard for us to say how good the experience will be in 5-10 years.

If your problem can be solved by a bot, then an old-fashioned touch-tone phone menu would be an entirely sufficient solution, no “AI” needed.

If not, then plugging an LLM into your IVR will never be worth the expense since the customer will need to talk to a human anyway.

“AI” is a bubble. Sure, it might have some niche applications where it’s viable, but it’s heavily overpromised and due for disinvestment this year.

And yet we don’t use touch-tone menus; bots that suck are already commonplace. An LLM bot could dramatically improve the user experience and would probably use the same resources that the current bots do.

Simple things like “I want to fill a prescription”, “I want to schedule a technician” or “do you have blah in stock” could be orchestrated by a bot that sounds human, and people would prefer that to traversing a directory tree for 10 minutes.

I don’t even want to think about how someone would implement a customer facing inventory query using a touch-tone interface, let alone use that.

I fail to see how adding an LLM to an IVR could improve that situation. Keywords like “fill prescription”, “schedule technician”, and “do you have [blank] in stock” are already present and don’t need any kind of text generation to shunt a caller into the appropriate queue or run a query on a warehouse database.
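The keyword routing just described needs no text generation at all. Here is a minimal Python sketch, assuming the caller’s speech has already been transcribed to text; the keyword table and queue names are hypothetical:

```python
# Hypothetical keyword -> queue table; a real IVR would load its own configuration.
ROUTES = {
    "prescription": "pharmacy_queue",
    "technician": "service_scheduling_queue",
    "in stock": "inventory_lookup",
}
DEFAULT_QUEUE = "human_agent_queue"  # anything unrecognized goes to a person


def route(transcript: str) -> str:
    """Return the queue for the first keyword found in the transcribed request."""
    text = transcript.lower()
    for keyword, queue in ROUTES.items():  # dicts keep insertion order
        if keyword in text:
            return queue
    return DEFAULT_QUEUE


print(route("I want to fill a prescription"))  # pharmacy_queue
```

The same sketch also shows the weakness the other side of the thread points to: a paraphrase such as “refill my meds” matches no keyword and falls through to the default queue.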

Where, exactly, do you think an LLM could contribute other than, like, a computer generated bedtime story hotline or something?

I was a supervisor at a call center until recently and yeah, this is definitely coming. It was already to the point where they were arguing with me about hiring enough people, because soon we’d have an AI solution to take a lot of the calls. You can already see it in the chatbots coming out.

Copilot is just so much faster than me at generating code that looks fancy and also manages to maximize the number of warnings and errors.

That will happen. And if they’re wrong, they’ll crash and burn. That’s how tech bubbles burst.

Clearly my main concern… But after reading a lot of reassuring comments, I’m more and more convinced that humans will always be superior.

This is their only retaliation for the fact that managers have already been replaced by git tools and CI.

So, I asked ChatGPT to write a quick PowerShell script to find the number of months between two dates. The first answer it gave me took the number of days between them and divided by 30. I told it that it needed to be more accurate than that, so it wrote a while loop that added 1 month to the first date until it was later than the second date. Not only is that obviously the least efficient way to do it, but it had no check that the date being incremented was actually the earlier one, so you could just end up with zero. The results I got from Copilot were not much better.
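For comparison, counting whole months needs neither a divide-by-30 nor a loop. This sketch is in Python rather than PowerShell, and it assumes one particular definition (a month counts only once the same day of the month has been reached), since “months between two dates” has several reasonable definitions. It also accepts the dates in either order, which is the check the generated loop was missing:

```python
from datetime import date


def whole_months_between(start: date, end: date) -> int:
    """Complete calendar months from start to end; negative if end is earlier.

    Assumed convention: a month is complete only once end.day >= start.day.
    """
    if end < start:
        # Handle reversed arguments explicitly instead of silently returning 0.
        return -whole_months_between(end, start)
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        # The final month has not been completed yet.
        months -= 1
    return months
```

With this convention, `whole_months_between(date(2024, 1, 15), date(2024, 3, 14))` is 1, and swapping the arguments gives -1 instead of zero.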

In my experience, unless there is existing code that does exactly what you want, these AIs are not at the level of an experienced dev. Not by a long shot. As they improve, they’ll obviously get better, but as with anything in this industry, you have to keep up and adapt or you’ll get left behind.

The thing is that you need several AIs. One to write the prompt, so that the one that codes gets the question you actually want answered. Then a third one that writes checks and follows up on the code that was written.

When run in a feedback loop like this, the quality you get out will be much higher than just asking ChatGPT to make something.
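The feedback loop described above can be sketched as a generate-and-check cycle. This is a minimal Python sketch under stated assumptions: `generate` and `check` are hypothetical stand-ins for the “coder” and “reviewer” models (no real LLM API is called), and the first “prompt-writing” model is left out, with `task` standing in for its output:

```python
from typing import Callable, Optional


def refine(
    task: str,
    generate: Callable[[str, str], str],
    check: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Generate a candidate, check it, and feed any failure back into the next try.

    `check` returns None when the candidate passes, or a failure message otherwise.
    """
    feedback = ""
    for _ in range(max_rounds):
        candidate = generate(task, feedback)
        problem = check(candidate)
        if problem is None:
            return candidate
        feedback = problem  # the next attempt sees why the last one failed
    return None  # out of rounds: hand the task to a human


# Hypothetical toy stand-ins so the sketch runs: the "coder" only succeeds after feedback.
def toy_generate(task: str, feedback: str) -> str:
    return "fixed" if feedback else "first draft"


def toy_check(candidate: str) -> Optional[str]:
    return None if candidate == "fixed" else "tests failed"


print(refine("months between two dates", toy_generate, toy_check))  # fixed
```

The `max_rounds` cap matters: without it, a checker that never passes would loop forever, and returning `None` makes “a human takes over” an explicit outcome.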

This is the idea behind things like “chain of thought” and “tree of thought”.


This is a real danger in the long term. If advances in AI and robotics reach a certain level, they could detach a big portion of the lower and middle classes from society’s flow of wealth and disrupt structures that have existed since the early Industrial Revolution. The educated common man stops being an asset. The whole world becomes a banana republic where only industry and government are needed, and there is an impassable gap between common people and the uncaring elite.

Right. I agree that in our current society, AI is a net loss for most of us. There will be a few lucky ones who will almost certainly be paid more than they are now, but that will be at the cost of everyone else, and even they will certainly be paid less than the shareholders and executives. The end result is a much lower quality of life for basically everyone. Remember what the Luddites were actually protesting and you’ll see how AI is no different.

This is exactly what I see as the risk. However, the elites running industry are, on average, fucking idiots. So we have been seeing frequent cases of them trying to replace people whose jobs they don’t understand with technology that even leading scientists don’t fully understand, in order to keep those wages for themselves, all in spite of those who do understand the jobs saying that it is a bad idea.

Don’t underestimate the willingness of upper management to gamble on things and inflict the consequences of failure on the workforce. Nor their willingness to switch to a worse solution, not because it is better or even cheaper but because it means giving less to employees, if they think that they can get away with it.

White collar never should have been getting paid so much more than blue collar, and I welcome seeing the shift balance things out, so that everyone wants to eat the rich.

> White collar never should have been getting paid so much more than blue collar

Actually I see that the other way around. Blue collar should have never been paid so much less than white collar.

The rich will have weapons and technology. I see a 1984 + Hunger Games scenario as more likely.

I’m in IT and I don’t believe this will happen for quite a while if at all. That said I wouldn’t let this keep you up at night, it’s out of your control and worrying about it does you no favours. If AI really can replace people then we are all in this together and we will figure it out.

AI is a really bad term for what we are all talking about. These sophisticated chatbots are just cool tools that make coding easier and faster, and for me, more enjoyable.

What the calculator is to math, LLMs are to coding, nothing more. Actual sci-fi-style AI, like self-aware code, would be scary if it were ever demonstrated to even be possible, which it has not been.

If you ever have a chance to use these programs to help you speed up writing code, you will see that they absolutely do not live up to the hype attributed to them. People shouting the end is nigh are seemingly exclusively people who don’t understand the technology.

I’ve never had to double check the results of my calculator by redoing the problem manually, either.

Yeah, this is the thing that always bothers me. By their very nature as large language models, they can generate convincing language. Likewise, image “AI” can generate convincing images. Calling it AI is both a PR move for branding and an attempt to conceal the fact that it’s all just regurgitating bits of stolen copyrighted content.

Everyone talks about AI “getting smarter”, but by the very nature of how these types of algorithms work, they can’t “get smarter”. Yes, you can make them work better, but they will still only be either interpolating or extrapolating from the training set.

Haven’t we started using AGI, or artificial general intelligence, as the term for the kind of AI you are referring to? That self-aware, intelligent software?

Now AI just means reactive coding designed to mimic certain behaviours, or even self learning algorithms.

That’s true, and language is constantly evolving for sure. I just feel like AI is a bit misleading because it’s such a loaded term.

I get what you mean, and I think a lot of laymen do have unreasonable ideas about what LLMs are capable of, but as a counterpoint, we have used the label AI to refer to very simple bits of code for decades, e.g. video game characters.

As an example:

Salesforce has been trying to replace developers with “easy to use tools” for a decade now.

They’re no closer than when they started. Yes, the new, improved flow builder and omni studio look great at first in the simple, preplanned demos they make. But they’re very slow, unsafe to use, and generally impossible to debug.

As an example, a common use case is: a sales guy wants to create an opportunity with a product. They go on about how omni studio lets an admin create a set of independently loading pages that let them:

• create the opportunity record, associating it with an existing account number.

• add a selection of products to it.

But what if the account number doesn’t exist? It fails. It can’t create the account for you, nor prompt you to do it in a modal. The opportunity page only works with the opportunity object.

Also, if the user tries to go back, it doesn’t allow them to delete products already added to the opportunity.

Once we get actual AIs that can handle context and planning, then our field is in danger. But as long as we’re going down the glorified chatbot route, it’s not in danger.

They haven’t replaced me with cheaper non-artificial intelligence yet, and that’s leaps and bounds better than AI.

Yeah, the real danger is probably that it will be harder for junior developers to be considered worth the investment.

I’m a composer. My Facebook is filled with ads like “Never pay for music again!”. It’s fucking depressing.

Good thing there’s no Spotify for sheet music yet… I probably shouldn’t give them ideas.

If you are afraid about the capabilities of AI you should use it. Take one week to use chatgpt heavily in your daily tasks. Take one week to use copilot heavily.

Then you can make an informed judgement instead of being irrationally scared of some vague concept.

Yeah, not using it isn’t going to help you when the bottom line is all people care about.

It might take junior dev roles and turn senior devs into QA, but that skillset will be key moving forward if that happens. You’re only shooting yourself in the foot by refusing to integrate it into your workflows, even if it’s just as an assistant/troubleshooting aid.

It’s not going to take junior dev roles; it’s going to transform the whole workflow and make the dev job more like QA than actual development, since the difference between junior, mid-level and senior is often only the scope of their responsibility. (I’ve seen companies that make juniors do a full-stack senior job while on paper they were still juniors, with a paycheck somewhere between junior and mid-level dev, and these companies are the majority in rural areas.)

Programming is the most automated career in history. Functions / subroutines allow one to just reference the function instead of repeating it. Grace Hopper wrote the first compiler in 1951; compilers, assemblers, and linkers automate creating machine code. Macros, higher level languages, garbage collectors, type checkers, linters, editors, IDEs, debuggers, code generators, build systems, CI systems, test suite runners, deployment and orchestration tools etc… all automate programming and programming-adjacent tasks, and this has been going on for at least 70 years.

Programming today would be very different if we still had to wire up ROM or something like that, and even if the entire world population worked as programmers without any automation, we still wouldn’t achieve as much as we do with the current programmer population + automation. So it is fair to say automation is widely used in software engineering, and greatly decreases the market for programmers relative to what it would take to achieve the same thing without automation. Programming is also far easier than if there was no automation.

However, there are more programmers than ever. It is because programming is getting easier, and automation decreases the cost of doing things and makes new things feasible. The world’s demand for software functionality constantly grows.

Now, LLMs are driving the next wave of automation in the world’s most automated profession. However, progress is still slow: without building massive, very energy-expensive models, outputs often need a lot of manual human-in-the-loop work. They are great as a typing assist to predict the next few tokens, and sometimes to spit out a common function that you might otherwise have been able to get from a library. They can often answer questions about code, quickly find things, and help you find the name of a function you know exists but can’t remember the exact name of. And they can do simple tasks that involve translating well-specified natural language into code. But in practice, trying to use them for big, complicated tasks is currently often slower than just doing it without LLM assistance.

LLMs might improve, but probably not so fast that it is a step change; it will be a continuation of the same trends that have been going for 70+ years. Programming will get easier, there will be more programmers (even if they aren’t called that) using tools including LLMs, and software will continue to get more advanced, as demand for more advanced features increases.

AI powered spreadsheets are going to be the next big technology for programmers :D

You’re certainly not the only software developer worried about this. Many people across many fields are losing sleep thinking that machine learning is coming for their jobs. Realistically automation is going to eliminate the need for a ton of labor in the coming decades and software is included in that.

However, I am quite skeptical that neural nets are going to be reading and writing meaningful code at large scales in the near future. If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself. It’s reasonable to be concerned that employees will need to use these tools in the near future. That’s because these are new, useful tools and software developers are generally expected to use all tooling that improves their productivity.

> I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself.

I agree, sosodev. I think it would be wise to at least be aware of modern A.I.'s current capabilities and inadequacies, because honestly, you gotta know what you’re dealing with.

If you ignore and avoid A.I. outright, every new iteration will come as a complete surprise, leaving you demoralized and feeling like shit. More importantly, there will be less time for you to adapt because you’ve been ignoring it when you could’ve been observing and planning. A.I. currently does not have that advantage, OP. You do.

As someone with deep knowledge of the field, quite frankly, you should know that AI isn’t going to replace programmers. Whoever says that is selling either a snake-oil product or their expertise as a “futurologist”.

Could you elaborate? I don’t have deep knowledge of the field; I only write rudimentary scripts to make some parts of my job easier. But from the few videos on the subject that I’ve seen, and from the few times I’ve asked AI to write a piece of code for me, I’d say I share the OP’s worry. What would you say humans add to programming that can’t (and never can) be replaced by AI?

I think the need for programmers will always be there, but there might be a transition toward higher abstraction levels. This has actually always been happening: we started with a big focus on assembly languages, where we put in machine code, but nowadays a much smaller portion of programmers work at that level, and most do stuff in Python, Java or whatever. It is not essential to know about garbage collection when you are writing an application, because the language runtime already does that for you.

Programmers are there to tell a computer what to do. That includes telling a computer how to construct its own commands accordingly. So, giving instructions to an AI is also programming.

Yeah, that’s what I was just thinking. Once we somehow synthesize this LLM into a new type of programming language, it gets interesting. Maybe a more natural language that gets the gist of what you are trying to do. And then a unit test to see if it works. And then you verify. Not sure if that can work.

TBH I’m a bit shocked that programmers are already using AI to generate code; I only program as a hobby anymore. But it sounds interesting. If I can get more of my ideas done with less work, I’d love it.

I think fundamentally, philosophically there are limits. Ultimately you need language to describe what you want to do. You need to understand the problem the “customer” has and formulate a solution and then break it down into solvable steps. AI could help with that but fundamentally it’s a question of describing and the limits of language.

Or maybe we’ll see brain interfaces that can capture some of the subtleties of intent from the programmer.

So maybe we’ll see the productivity of programmers rise by 500% or something. But something tells me (Jevons paradox) the economy would just use that increased productivity for more apps or more features. But maybe the qualifications needed for programmers will be reduced.

Or maybe we’ll see AI generating more generalized programming libraries and development suites. Or existing crusty libraries rewritten to be more versatile and easier to use by AI-powered programmers. Maybe AI could help us create a vast library of more abstract, standard problems and solutions.

Generative neural networks are the latest tech bubble, and they’ll only be decreasing in quality from this point on as the human-generated text used to train them becomes more difficult to access.

One cannot trust the output of an LLM, so any programming task of note is still going to require a developer for proofreading and bugfixing. And if you have to pay a developer anyway, why bother paying for ChatGPT?

It’s the same logic as Tesla’s “self-driving” cars, if you need a human in the loop then it isn’t really automation, just sparkling cruise control that isn’t worth the price tag.

I’m really looking forward to the bubble popping this year.

This year? Bold prediction.

It can’t reason. It can’t write novel, high-quality, high-complexity code. It can only parrot what others have said.

90% of code is something already solved elsewhere though.

AI doesn’t know whether the code it copies is correct. It will straight up hallucinate nonexistent libraries just because they look good at first glance.

Depends on how you set it up. A RAG LLM verifies its output against a set of sources, so that would be very unlikely in a state-of-the-art setup.
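A cheaper guard against hallucinated libraries than full retrieval is simply to check whether the imports in generated code resolve at all. This is not RAG, just a minimal Python sketch using the standard `ast` module and `importlib.util.find_spec`; it only reports names that cannot be found in the current environment, so a real but uninstalled package also gets flagged, and it cannot tell whether an existing package is the one the model meant:

```python
import ast
import importlib.util
from typing import List


def missing_imports(source: str) -> List[str]:
    """Top-level modules imported by `source` that cannot be found in this environment."""
    tree = ast.parse(source)  # parse only; the generated code is never executed
    names = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            # "import a.b.c" -> check the top-level package "a"
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Skip relative imports ("from . import x"); they refer to the project itself.
            names.add(node.module.split(".")[0])
    return sorted(n for n in names if importlib.util.find_spec(n) is None)


generated = "import json\nimport totally_made_up_pkg_xyz\n"
print(missing_imports(generated))  # ['totally_made_up_pkg_xyz']
```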

Thought about this some more so thought I’d add a second take to more directly address your concerns.

As someone in the film industry, I am no stranger to technological change. Editing in particular has radically changed over the last 10 to 20 years. There are a lot of things I used to do manually that are now automated. Mostly what it’s done is lower the barrier to entry and speed up my job after a bit of pain learning new systems.

We’ve had auto-coloring tools since before I began and colorists are still some of the highest paid folks around. That being said, expectations have also risen. Good and bad on that one.

Point is, a lot of times these things tend to simplify/streamline lower level technical/tedious tasks and enable you to do more interesting things.

I’m still in uni, so I can’t really comment on how the job market is reacting or will react to generative AI. What I can tell you is that it has never been easier to half-ass a degree. Any code, report or essay written has almost certainly come from an LLM, and none of it makes sense or it barely works. The only people not using AI are the ones who don’t have access to it.

I feel like it was always like this and everyone slacked as much as they could, but I just can’t believe it; it’s shocking. The lack of fundamental, basic knowledge has made working with anyone on anything such a pain in the ass. Group assignments are dead. Almost everyone else’s work comes from a ChatGPT prompt that didn’t describe their part of the assignment correctly. As a result, not only is it buggy as hell, but when you actually decide to debug it, you realize it doesn’t even do what it’s supposed to do, and now you have to spend two full days implementing every single part of the assignment yourself because “we’ve done our part”.

Everyone’s excuse is “oh well, university doesn’t teach anything useful, why should I bother when I’m learning <insert js framework>?” and then you look at their project and it’s just another boilerplate React calculator app in which, you guessed it, most of the code is generated by AI. I’m not saying everything in college is useful or that you are a sinner for using somebody else’s code. Indeed, be my guest: dodge classes and copy-paste stuff when you don’t feel like doing it, but at least give a damn about the degree you are putting your time into, and don’t dump your work on somebody else.

I hope no one carries this kind of attitude toward their work into the job market. If most members of a team are using AI as their primary tool to generate code, I don’t know how anyone can trust anyone else on that team, which means more and longer code reviews and meetings, and thus slower production. With this, bootcamps getting scammier, and most companies giving up on junior devs, I really don’t think the software industry is heading in a good direction.

I think I will ask people if they use AI to write code when I am interviewing them for a job and reject anyone who does.